(Fall 2021) Initial Idea

(Spring/Summer 2022) USRC Competition and Grant

(Fall 2022) Design and Procurement

(Spring 2023) Manufacturing and Manual Flight

The TDEL team is preparing for its first manual flight in the near future. In the meantime, progress reports from each team are listed below:

Hardware has completed assembly of the airframe, mounted the motors, fastened the propellers, and begun soldering and connecting the electrical components, including the ESC, PDB, Pixhawk, and Jetson. While building the first drone, the team designed custom mounts, rotor guards, and a cover sized to these components. The team also identified off-the-shelf components that could be redesigned and replaced in the long term to improve performance.

The communication and sensors team has created a finalized wiring diagram of the drone's electrical components, updated the sensor list, finished soldering, and paired the transmitter and receiver.

The simulation and estimation team has focused on researching sensor integration and has started porting the existing MATLAB simulation to C++. The team is also preparing the drone for manual flight by flashing the firmware and programming the flight plan.

(Fall 2023) Drone Prototype 2, Real-Time Data Acquisition, Estimation Progress

Hardware has ordered four new drones that improve on the previous iteration. Upgrades include an improved flight controller with fewer soldered joints, a GPS module, and a camera. These upgrades will give the team practice installing new systems on the drones and will also provide more sensors for estimation analysis.

Real-time data acquisition took shape during fall 2023 in both simulation and hardware. The simulation runs the PX4 firmware on a laptop, commanding a drone in the Gazebo physics simulator. The hardware setup currently relies on a telemetry radio architecture. The software architecture is built on ROS1, written in Python and running on Ubuntu 20.04.

(Spring 2024)

Spring 2024 DEL Team

Conferences and events attended by DEL in Spring 2024:

- CSE Endowment Project Showcase

- AIAA Region IV Student Conference – Placed 2nd

- Annual Perkins Paper Plane Competition and Lunch – Project Showcase

- 2024 ASE/EM Academy of Distinguished Alumni – Senior Capstone Showcase

(Fall 2024)

Fall 2024 Major Objectives

- Full port of the code to Python and integration onto the drone

- Finalization of the visualization code

- Integration of multiple sensors into the estimation programs

- USRC Project Proposal Submitted: “Orbital Trajectory Analysis and 3D Satellite Damage Assessment via Computer Vision and LiDAR”

An inventory of our current components is located here.

(Spring 2025)

Spring 2025 Major Objectives

- Acceptance to AIAA Region IV Student Conference

- Completion of Research Paper: “Autonomous Drone Navigation in GPS-Denied Environments Using Depth Cameras”

- Attendance at the conference, March 28-29

- Continued development of the drone hub and swarm projects after the conference

(Future Plans) Path Planning and Swarms

The following are possible future projects, to be pursued once the initial grant is complete.

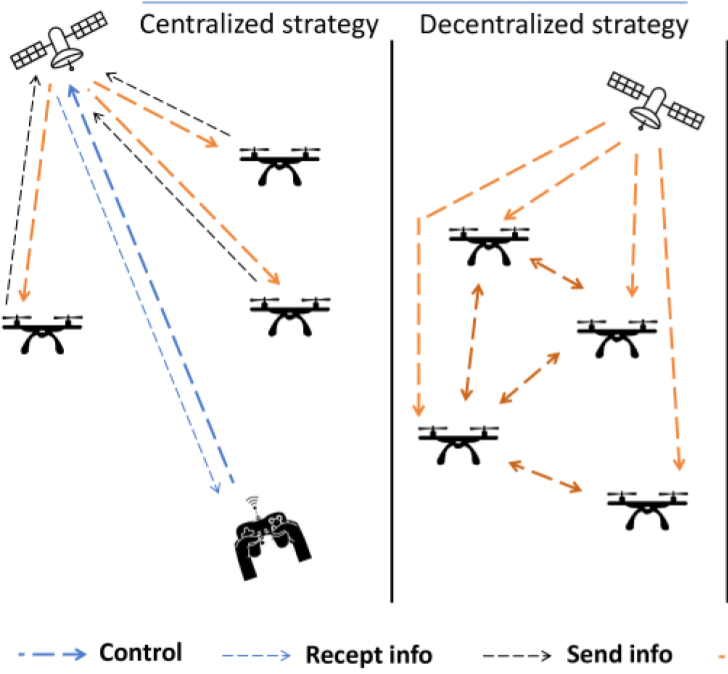

Swarms – Once the Kalman filter algorithm reliably determines our position with minimal error, we can start expanding the project. The first step would be to form a swarm network. A centralized network would be easier to start with, but a decentralized network would ultimately be more robust. This could involve designing a mesh network in which drones relay information to one another, maintaining communication even when individual drones are out of range or compromised.
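To make the relay idea concrete, here is a minimal Python sketch of multi-hop routing; it is an illustration, not a design the lab has built. It assumes each drone has a fixed radio range (a hypothetical `radio_range` parameter), treats any two live drones within that range as linked, and finds the fewest-hop relay path with breadth-first search. A real decentralized mesh would discover routes from local neighbor information through a routing protocol rather than from a global list of positions; the sketch only shows how traffic can be relayed around out-of-range or compromised drones.

```python
import math
from collections import deque


def link_graph(positions, radio_range, alive):
    """Link every pair of live drones that are within radio_range of each other."""
    ids = [i for i in positions if i in alive]
    graph = {i: [] for i in ids}
    for idx, a in enumerate(ids):
        for b in ids[idx + 1:]:
            if math.dist(positions[a], positions[b]) <= radio_range:
                graph[a].append(b)
                graph[b].append(a)
    return graph


def relay_route(positions, radio_range, src, dst, alive=None):
    """Return the fewest-hop relay path from src to dst, or None if unreachable.

    positions: dict of drone id -> (x, y) coordinates (hypothetical input format).
    alive: set of drone ids still operating; compromised drones are left out.
    """
    if alive is None:
        alive = set(positions)
    if src not in alive or dst not in alive:
        return None
    graph = link_graph(positions, radio_range, alive)
    parent = {src: None}
    queue = deque([src])
    while queue:  # breadth-first search expands drones in order of hop count
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:  # walk parents back to the source
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nxt in graph[node]:
            if nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    return None
```

For example, with drones at A (0, 0), B (8, 0), C (16, 0), and D (8, 6) and a range of 10, A cannot reach C directly, so traffic is relayed through B; if B is compromised, the route falls back to A, D, C.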

The first step toward this goal would be to acquire parts for additional drones, which requires raising money, either through a Hornraiser campaign or by applying to student research grants that could fund them. The next step would be testing and verifying the estimation methods, specifically ensuring that visualization works with live data. Live estimation running on the drones themselves is also a requirement for multiple drones to move synchronously in a GPS-denied environment.

Path Planning – Once we have a swarm, we can develop path planning algorithms. In the path planning problem, a robot of given dimensions must navigate from point A to point B while avoiding all obstacles. The robot moves through the free space, which is not necessarily discretized. Rapidly-exploring random trees (RRT) are a common path planning approach: the planner repeatedly samples a random point in the space, finds the nearest node already in its tree, and extends the tree a short step toward the sample if that step avoids every obstacle. It continues until the tree reaches the goal, and the path is then read back through the tree. RRT* extends RRT by choosing lower-cost parents and rewiring nearby nodes as the tree grows, so its path cost converges toward the optimum.
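The RRT loop can be sketched in Python as follows. This is an illustrative 2D version under simplifying assumptions, not our planner: the robot is a point in a rectangular workspace, obstacles are circles given as `(cx, cy, r)`, and the parameters `step`, `goal_tol`, and `max_iters` are hypothetical choices. A robot with real dimensions can be handled by inflating each obstacle radius by the robot's radius. RRT* would add a choose-parent and rewiring step after each insertion, which is omitted here.

```python
import math
import random


def segment_clear(p, q, obstacles):
    """True if the segment p -> q stays outside every circular obstacle (cx, cy, r)."""
    px, py = p
    dx, dy = q[0] - px, q[1] - py
    seg_len_sq = dx * dx + dy * dy
    for cx, cy, r in obstacles:
        if seg_len_sq == 0.0:
            t = 0.0
        else:
            # Parameter of the point on the segment closest to the circle centre.
            t = max(0.0, min(1.0, ((cx - px) * dx + (cy - py) * dy) / seg_len_sq))
        closest = (px + t * dx, py + t * dy)
        if math.dist(closest, (cx, cy)) <= r:
            return False
    return True


def rrt(start, goal, obstacles, bounds, step=0.5, goal_tol=0.5, max_iters=5000, seed=0):
    """Basic RRT; returns a list of waypoints from start to goal, or None."""
    rng = random.Random(seed)
    xmin, xmax, ymin, ymax = bounds
    nodes = [start]
    parent = {0: None}  # node index -> parent index
    for _ in range(max_iters):
        sample = (rng.uniform(xmin, xmax), rng.uniform(ymin, ymax))
        # Nearest existing tree node to the random sample.
        near_i = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        near = nodes[near_i]
        d = math.dist(near, sample)
        if d == 0.0:
            continue
        # Steer: move at most `step` from the nearest node toward the sample.
        scale = min(1.0, step / d)
        new = (near[0] + scale * (sample[0] - near[0]),
               near[1] + scale * (sample[1] - near[1]))
        if not segment_clear(near, new, obstacles):
            continue
        nodes.append(new)
        new_i = len(nodes) - 1
        parent[new_i] = near_i
        # Connect to the goal once the tree is close enough and the link is collision-free.
        if math.dist(new, goal) <= goal_tol and segment_clear(new, goal, obstacles):
            nodes.append(goal)
            parent[len(nodes) - 1] = new_i
            path, i = [], len(nodes) - 1
            while i is not None:  # read the path back through the tree
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None
```

For instance, `rrt((1.0, 1.0), (9.0, 9.0), [(5.0, 5.0, 1.5)], (0.0, 10.0, 0.0, 10.0))` returns waypoints that bend around the obstacle blocking the straight line, or `None` if no path is found within `max_iters`. The path is feasible but generally not short, which is the gap RRT* addresses.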

Cameras mounted on drones can significantly enhance autonomous navigation by providing real-time visual feedback that lets the drone detect and avoid obstacles. This visual data can be processed to optimize flight paths, making maneuvers safer and more precise. By adding visual feedback to our drone's sensor suite, we can more accurately calculate changes in position and velocity. This would improve the overall accuracy of our position estimation methods, supporting our lab's goal of developing robust location estimation. For now, however, path planning is a long-term project that takes lower priority than using the sensors already integrated into the drone, due to its inherent complexity.
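As an illustration of how a camera-derived measurement could feed position estimation, the sketch below is a textbook linear Kalman filter for a single axis with a constant-velocity model. It is not the lab's estimator: it assumes the vision pipeline outputs a position measurement with known variance (`meas_var`), models unknown acceleration as white noise (`accel_var`), and the class and parameter names are hypothetical. Even though only position is measured, the filter also recovers velocity, which is the kind of position and velocity estimate visual feedback is meant to improve.

```python
class ConstantVelocityKF:
    """1D Kalman filter with state [position, velocity] (hypothetical example)."""

    def __init__(self, p0, v0, var_p0, var_v0, accel_var, meas_var):
        self.p, self.v = p0, v0                  # state estimate
        self.P = [[var_p0, 0.0], [0.0, var_v0]]  # state covariance
        self.q = accel_var                       # white-noise acceleration variance
        self.r = meas_var                        # camera position measurement variance

    def predict(self, dt):
        """Propagate: x' = F x, P' = F P F^T + Q, with F = [[1, dt], [0, 1]]."""
        a, b, d = self.P[0][0], self.P[0][1], self.P[1][1]
        q = self.q
        self.p += self.v * dt
        p00 = a + 2.0 * dt * b + dt * dt * d + q * dt**3 / 3.0
        p01 = b + dt * d + q * dt**2 / 2.0
        p11 = d + q * dt
        self.P = [[p00, p01], [p01, p11]]

    def update(self, z):
        """Correct the state with a position measurement z (H = [1, 0])."""
        a, b, d = self.P[0][0], self.P[0][1], self.P[1][1]
        s = a + self.r            # innovation variance
        k0, k1 = a / s, b / s     # Kalman gain for position and velocity
        y = z - self.p            # innovation (measurement minus prediction)
        self.p += k0 * y
        self.v += k1 * y
        # P = (I - K H) P, written out for the 2x2 case.
        self.P = [[(1.0 - k0) * a, (1.0 - k0) * b],
                  [(1.0 - k0) * b, d - k1 * b]]
```

In use, `predict(dt)` would run at each time step and `update(z)` whenever a new camera position fix arrives; a full estimator would extend the state to three axes and fuse the other onboard sensors as additional measurements.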