Check out our past and ongoing projects below.

Ongoing

Drug Delivery through the Blood Brain Barrier with MSC Exosomes

Problem Statement

Glioblastomas (GBMs) are among the most feared cancers because of their invasiveness, heterogeneity, and high recurrence rate. In addition, the blood-brain barrier (BBB) makes it difficult to efficiently dose and target tumors in the brain. Exosomes, however, are one of the few acellular biologics that can efficiently cross the BBB, and their ability to carry molecular cargo gives them unique therapeutic potential. Accordingly, our current project aims to leverage exosomes as a potential therapeutic for GBMs. We are currently conducting a literature review on exosomes in relation to the BBB and GBMs to guide our initial experiments, in which we aim to discover the mechanism of exosome transport across the BBB.

iCare: Lowering the Cost of Vision Diagnostics

Design Problem

The design problem is to create an affordable liquid-lens phoropter, addressing the high cost and limited accessibility of traditional phoropters. With eye care being a crucial aspect of public health, especially in underserved areas, the need for accessible vision testing equipment is paramount. Traditional phoropters, with their complex lens systems and high price tags, present significant barriers to entry for smaller clinics and practitioners. This restricts access to essential eye exams and prescription services, particularly in rural and economically disadvantaged regions.

Design Criteria

- Affordability: Our goal cost is under $200, as this would be about 1/15th the cost of the average phoropter and lower than anything we could find for sale, even for used products.

- Accuracy: We aim for spherical and cylindrical powers accurate to within 0.25 D and a cylindrical axis accurate to within 1 degree.

- Ease of Use: The device has to be able to conduct assessments at a similar rate to traditional phoropters, so around 5–10 minutes, and it should be easy to learn how to operate.

Current Status

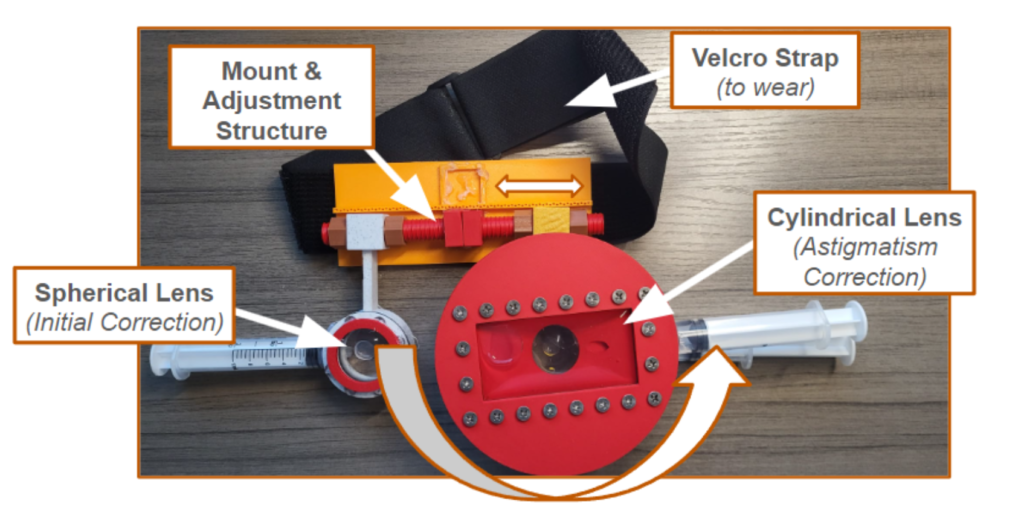

To bring the cost of the phoropter down to $200, we have opted for a manual liquid-lens solution instead of linear actuators, which add significantly to the cost. A gear reduction scales down linear motion so that the user can move a large slider to make millimeter-scale adjustments to the phoropter. Existing liquid-lens phoropters have demonstrated good accuracy, and a manual system allows the phoropter to work without power; one limitation, however, is ease of use. Because we use manual sliders instead of controlled actuators, the administrator of the eye exam has to adjust the prescription by hand.
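As a rough illustration of the gear-reduction idea, the sketch below maps slider travel to lens-side travel. The 20:1 ratio and the function name `lens_travel_mm` are hypothetical values chosen for the example, not measurements from our prototype.

```python
def lens_travel_mm(slider_travel_mm: float, reduction_ratio: float) -> float:
    """Return the lens-side travel produced by a given slider travel.

    reduction_ratio is the (hypothetical) gear reduction, e.g. 20 for 20:1.
    """
    if reduction_ratio <= 0:
        raise ValueError("reduction_ratio must be positive")
    return slider_travel_mm / reduction_ratio


# With an assumed 20:1 reduction, 40 mm of slider travel moves the lens side 2 mm.
print(lens_travel_mm(40.0, 20.0))
```

A larger reduction gives the operator finer control at the cost of a longer slider stroke for the same adjustment range.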

Developing an Interactive Web Application to Increase Awareness for Rare Diseases

Background

There are approximately 7,000-8,000 rare diseases, affecting 30 million patients in the US and 300 million worldwide. About 80% of these diseases have a genetic background. Their pathology is complex: conditions and symptoms can overlap with those of more common diseases.

Problem

Patients form an isolated community because of risk and uncertainty. R&D is limited because the market is small. The patient journey is complex: a decades-long wait for diagnosis, treatment side effects, social and emotional burdens, and financial challenges.

Solution

Despite growing research efforts, a lack of fundamental understanding of rare diseases remains widespread. To promote awareness for rare diseases, this project proposes a user-friendly, interactive web application to deliver educational information regarding diseases.

Past

SUTR: Surgical Training Tools for Congenital Cardiac Surgery

Problem Statement

Congenital heart disease (CHD) imposes a substantial burden: about 1 million children are born with CHD each year. Treating these babies requires open-heart surgery, and these procedures are among the most specialized in surgery, demanding highly trained professionals. While there are some highly successful pediatric heart surgery programs in high-income areas, training the next generation of surgeons and providing treatment opportunities in areas that lack these programs is a global challenge. Additionally, surgical training tools are rapidly emerging in different surgical specialties because they help create skilled surgeons with less time and fewer resources. Working with two surgeon collaborators, Dr. Carlos Mery and Dr. Ziv Beckerman, trained specialists in pediatric cardiac and congenital surgery, this project will attempt to create surgical training tools that allow residents and trainees interested in this specialty to gain more experience with the hand movements and motions needed during the operation. The system will involve a device that provides trainees with tactile/haptic feedback, helping them produce movements comparable to those of experienced surgeons.

Current Progress

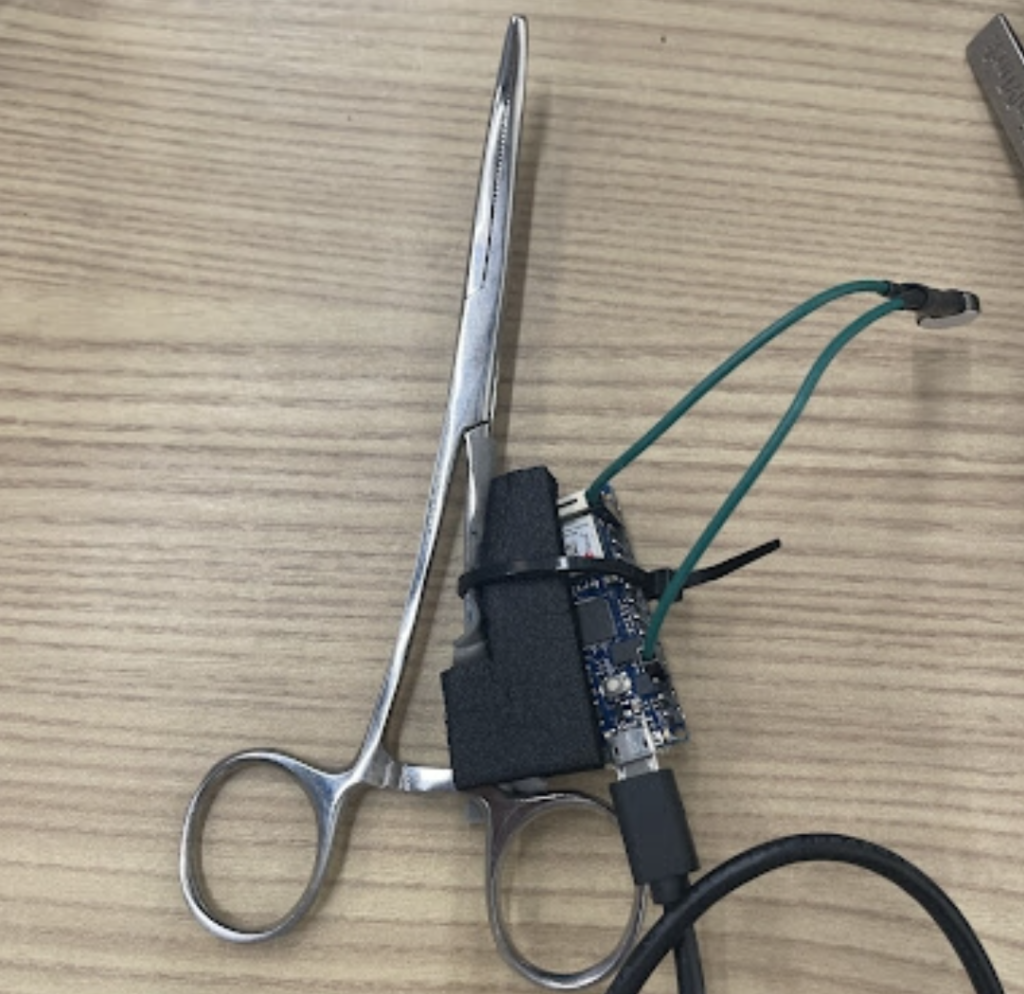

Currently, after a couple of meetings with the clinical collaborators, the team has an initial prototype that uses an accelerometer and gyroscopes to track the user’s motion while handling instruments used during surgery and provides haptic feedback to alert the user when their motions fall outside the optimal ranges. The team is planning to observe procedures to learn more about the surgeons’ movements and to obtain realistic tools used during the procedures.

Child Neurohabilitation

Problem Statement

In 2020, the 4-year-old daughter of our research mentor suffered a traumatic brain injury that resulted in motor and cognitive disability. The goal of our project is to restore the patient’s ability to grasp objects with her left hand. Functional electrical stimulation (FES) involves the electrical stimulation of muscles to regain certain motor functions (e.g., hand grasping and arm extension). Although there has been research on using FES to help stroke patients recover more quickly, little research has been conducted on the usefulness of FES in children. Moreover, the effectiveness of FES in a child more than a year after the trauma is unknown.

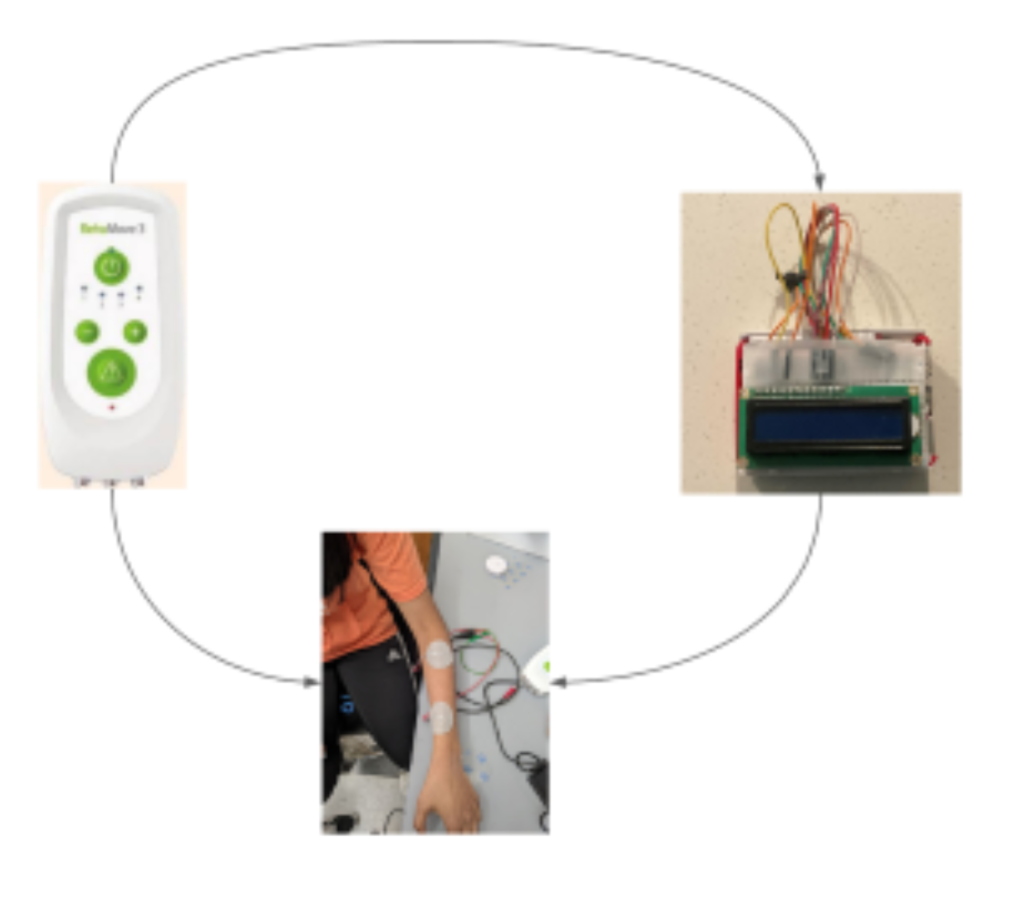

Our current approach involves two components: a neural decoder and an FES stimulator. The neural decoder takes the EMG signal as input and infers whether the patient is trying to open or close her hand. The FES stimulator then applies a stream of electrical pulses to stimulate the muscles to open.
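To make the decoder's role concrete, here is a deliberately simple sketch of intent decoding from two EMG windows. It is not our actual decoder: the two-channel layout (one extensor, one flexor), the mean-absolute-value feature, and the `rest_threshold` value are all assumptions made for illustration.

```python
from statistics import fmean


def envelope(samples):
    """Mean absolute value of one EMG window, a simple estimate of muscle activity."""
    return fmean(abs(s) for s in samples)


def decode_intent(extensor_window, flexor_window, rest_threshold=0.05):
    """Return "open", "close", or "rest" for one pair of EMG windows.

    The threshold and the two-channel comparison are illustrative assumptions.
    """
    ext = envelope(extensor_window)
    flex = envelope(flexor_window)
    if max(ext, flex) < rest_threshold:
        return "rest"  # neither muscle group is clearly active
    return "open" if ext > flex else "close"


print(decode_intent([0.3, -0.4, 0.5], [0.01, -0.02, 0.01]))
```

In a full system, an "open" decision would be the trigger for the stimulator to start its pulse train.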

Low-Cost Drug Refrigeration

Problem Statement

A consistent supply of electricity is not guaranteed in low- and middle-income countries (LMICs), which puts strain on essential facilities such as hospitals and clinics. An inconsistent electrical supply in hospitals and clinics causes a myriad of problems, ranging from machine outages to a lack of water and light. Refrigeration is especially important for preventing the degradation of drugs, samples, and biomaterials. Proper refrigeration is essential for the storage of life-saving drugs and research samples, yet modern refrigeration consumes large amounts of electricity. To address this problem, our team is developing a refrigerator capable of storing drugs between 2 °C and 8 °C while using minimal electricity.

Click here for a short video summary of the drug refrigeration issue and our proposed solution!

Current Progress

Currently, we are perusing research literature in order to gather a preliminary list of insulation materials, cooling methods, electrical and non-electrical components, and mechanisms needed to create a prototype.

Needle Thoracostomy Training Tools with Haptic Feedback

Problem Statement

Needle thoracostomy is a life-saving procedure because it decompresses a tension pneumothorax, a condition in which air is trapped between the lungs and the chest wall. If untreated, this condition almost always leads to death, as the trapped air obstructs the function of both the heart and lungs. However, the procedure itself is extremely sensitive because it is performed close to vital organs, and it can worsen patient morbidity in the hands of an untrained operator. This risk increases further because a pneumothorax requires immediate attention, so inexperienced individuals often end up performing the procedure. We are therefore developing a vibrotactile glove that simulates needle thoracostomy through haptic touch to remotely train those who may be inexperienced, such as first responders and new medical practitioners. The glove will provide a mentee with vibrational cues from a mentor to guide them in successfully completing the task. Currently, we are designing and evaluating various ways for the mentee to receive haptic feedback to ensure the cues are intuitive, and we are building a prototype that remotely streams position data.

Soft Robotics for Wound Healing

Problem Statement

We are developing a soft robotic device that will assist in wound healing. There are many bandage based adhesive products that mechanically stimulate tissue growth. However, we aim to automate individual arms as pneumatic artificial muscles that can better promote uniform tissue growth and healing by applying modulated tension in response to growing tissue. We also aim to minimize scar formation with this method. This device has the potential to help both with at-home wound care and larger-scale burns and lacerations. We are currently working with Dr. Ann Fey and the Human-Enabled Robotic Technology lab to build pneumatic artificial muscles with low-cost materials such as mesh tubing, zip ties, and tension springs. Our next steps are to connect the muscles to a fluidic control board and eventually miniaturize the prototype.

Leukemia Detection

Problem Statement

Every year, 65% of all deaths associated with leukemia occur in developing countries. Many of these deaths are preventable with early detection, proper treatment, and accurate diagnoses, yet there is little infrastructure in place to accurately detect leukemia in developing nations. Leukemia can be diagnosed through various tests. Manual diagnoses are made by experienced medical professionals or trained operators using physical examinations, blood tests, blood counts, and bone marrow and cerebrospinal fluid biopsies. However, manual diagnosis is time-consuming, tedious, and prone to errors. To combat these issues and address the need for improved leukemia diagnostic techniques in developing countries, we sought to create a machine learning (ML) solution for detecting leukemia from blood smear images taken by our low-cost phone-based microscope.

Implementation

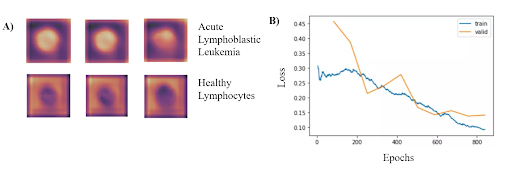

Neural Network Architecture

To identify blood cells with acute lymphocytic leukemia (ALL), we framed leukemia detection as a binary classification task in which each blood cell is classified as either ALL or healthy (HEM). We used a ResNet-34 model pretrained on the ImageNet dataset. Freezing all parameters except those of the last MLP layer, we then trained this model on the publicly available Cancer Imaging Archive ALL dataset, which contains 15,135 segmented and labeled images of immature leukemic blasts and healthy lymphocytes derived from blood smears. The model was trained for 10 epochs with a batch size of 64 images, using an SGD optimizer with Nesterov momentum and a learning rate of 0.010. Input images were 257x257x3 pixels with RGB channels. To obtain the performance metrics, ten-fold cross-validation was performed.
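For readers unfamiliar with ten-fold cross-validation, the following sketch shows one way to generate the train/validation index splits. It is an illustration only: the shuffling seed and the helper name `k_fold_indices` are assumptions, and our actual training pipeline may have split the data differently.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_indices, val_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into k folds of near-equal size.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        val = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, val


# Each of the 15,135 images lands in the validation fold exactly once.
for train, val in k_fold_indices(15135):
    print(len(train), len(val))
```

The reported mean and standard deviation of each metric would then be computed across the ten validation folds.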

In the binary classification task, our model consistently distinguished cancerous lymphoblasts (ALL) from healthy lymphocytes (HEM), with an accuracy of 95.72 ± 0.68% (mean ± std). This value is comparable to standard cytochemical diagnostics of acute lymphoblastic leukemia, which exhibit accuracies ranging from 86% to 97%. Other metrics indicating successful performance included a sensitivity of 97.24 ± 0.31%, a specificity of 92.65 ± 0.74%, an F1 score of 95.55 ± 0.63%, and an area under the curve of 0.9922 ± 0.011. The CNN can run locally on a low-grade smartphone, with an interface that lets the user photograph the blood smear and view previous and current results from the model. The figure on the bottom left shows which regions of the cells the classifier attends to when determining whether a cell is leukemic. The figure on the bottom right shows the training and validation losses recorded while training the classifier.
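The metrics above all derive from the four confusion-matrix counts. The sketch below shows the standard definitions, treating ALL as the positive class; the counts in the example are made up for illustration and are not our results.

```python
def binary_metrics(tp, fp, tn, fn):
    """Compute standard binary classification metrics from confusion counts."""
    sensitivity = tp / (tp + fn)  # fraction of ALL cells correctly flagged
    specificity = tn / (tn + fp)  # fraction of healthy cells correctly cleared
    precision = tp / (tp + fp)
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
    }


# Illustrative counts only (not taken from our experiments).
print(binary_metrics(tp=90, fp=20, tn=80, fn=10))
```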

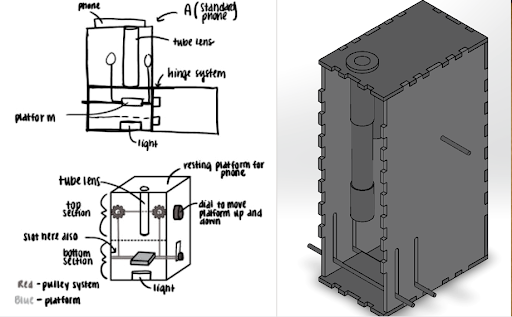

Slide Imaging Device

After analyzing various microscopy techniques, we determined that brightfield microscopy was the cheapest and the safest. As in traditional optical microscopes, we used convex lenses in our prototype and used the manufacturer specifications to determine the approximate distances between the lenses. We built a dual-lens system and calculated the distance between the two lenses from their focal lengths, the image distance, and the near point of the eye. For the microscope tube, we used 3D-printed PLA plastic in order to get the exact dimensions. For the platform movement system, we designed a simple pulley system. The most important requirement for the pulley system is that both sides of the platform rise and lower equally; to achieve this, our design uses a single axle to support and adjust both sides of the pulley, as can be seen in the figure. Lastly, we made sure there was ample space for the light source to be switched out if necessary.
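The lens-spacing calculation can be sketched with the thin-lens equation. This is a textbook compound-microscope model, not necessarily the exact calculation we performed: it assumes ideal thin lenses, a final virtual image at a 250 mm near point, and hypothetical focal lengths in the example.

```python
def image_distance(focal_length_mm, object_distance_mm):
    """Solve the thin-lens equation 1/f = 1/u + 1/v for the image distance v."""
    if object_distance_mm <= focal_length_mm:
        raise ValueError("object must sit beyond the focal length for a real image")
    return focal_length_mm * object_distance_mm / (object_distance_mm - focal_length_mm)


def eyepiece_object_distance(focal_length_mm, near_point_mm=250.0):
    """Object distance that places the eyepiece's virtual image at the near point."""
    return focal_length_mm * near_point_mm / (near_point_mm + focal_length_mm)


def lens_separation(f_objective_mm, object_distance_mm, f_eyepiece_mm, near_point_mm=250.0):
    """Objective-to-eyepiece spacing: objective image distance plus eyepiece object distance."""
    return (image_distance(f_objective_mm, object_distance_mm)
            + eyepiece_object_distance(f_eyepiece_mm, near_point_mm))


# Hypothetical lenses: 20 mm objective with the slide 25 mm away, 50 mm eyepiece.
print(round(lens_separation(20.0, 25.0, 50.0), 1))
```

Because a phone camera rather than an eye sits at the eyepiece in practice, the computed spacing is only a starting point that the adjustable platform then fine-tunes.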

For the microscope, once a sample of blood is obtained, the first step is to dye the sample using hematoxylin. We will then open up the front of the microscope and place the slide holding the sample onto the platform. Next, we turn on the light source to illuminate the sample. The phone is placed on the top of the eyepiece. From here, we simply adjust the platform height using the pulley system until the image is brought into focus.

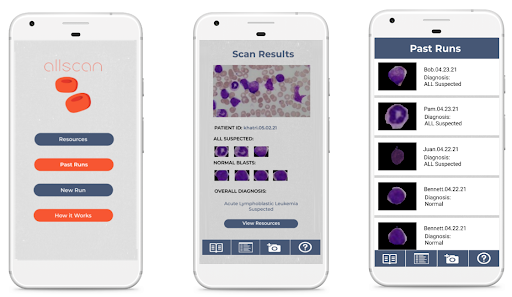

Mobile App

A key part of ensuring that our detection algorithm was accessible to our target audience was developing an easy-to-use mobile app that was capable of running on low-cost smartphone devices. In order to develop this, we utilized React Native, a cross-platform app development framework with capabilities to easily create and deploy apps capable of running on any grade or type of smart device. Another important aspect of creating a viable user interface for developing nations was ensuring that the algorithm would be able to run locally without a need for data, wifi, or cloud computing resources. To meet this goal, we utilized TensorFlow Lite, an open-source platform with the ability to allow for on-device inference.

Using the hardware microscope and a mobile device, the user first takes or selects an image of a Giemsa-stained thin blood smear and uploads it to the mobile app. Next, the model isolates the lymphocytes in the image based on differences in staining, using a masking method in which all parts of the image except the cell under consideration are blacked out. Following this initial masking, each lymphocyte is classified as exhibiting characteristics of ALL or as healthy using the binary classification model. This process is automatically repeated for all lymphocytes within the image. Following the image processing, the numbers of healthy and leukemic lymphocytes are displayed as shown in the figure below. This data is stored directly on the phone, within a 200 MB file-size limit, so that the user can view past results at any time. In addition to the classification itself, the app indicates whether the individual may have ALL and shows relevant resources and health organizations to contact, so that users know the next steps required to receive treatment. The app also includes instructions for obtaining and staining a blood smear, instructions on how to use the microscope, and options to order replacement parts. Several screens from the mobile app are shown below.
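The mask-then-classify loop described above can be sketched as follows. The sketch assumes a grayscale image stored as nested lists and a precomputed label map in which each lymphocyte has its own integer id (0 for background); the `classify` callable is a hypothetical stand-in for the real model.

```python
def mask_cell(image, labels, cell_id):
    """Black out every pixel that does not belong to the given cell."""
    return [
        [px if lab == cell_id else 0 for px, lab in zip(img_row, lab_row)]
        for img_row, lab_row in zip(image, labels)
    ]


def count_cells(image, labels, classify):
    """Classify each labeled cell in turn and tally the ALL and HEM counts."""
    counts = {"ALL": 0, "HEM": 0}
    cell_ids = sorted({lab for row in labels for lab in row if lab != 0})
    for cell_id in cell_ids:
        counts[classify(mask_cell(image, labels, cell_id))] += 1
    return counts


# Toy 2x4 image with two cells; the brightness rule is a stand-in classifier.
image = [[5, 5, 0, 9], [5, 5, 0, 9]]
labels = [[1, 1, 0, 2], [1, 1, 0, 2]]
toy_classifier = lambda masked: "ALL" if sum(map(sum, masked)) > 19 else "HEM"
print(count_cells(image, labels, toy_classifier))
```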

Future Improvements

To improve our leukemia detection model, we need to evaluate newer neural network architectures to see whether accuracy improves. One drawback of standard neural networks is that they do not provide a measure of uncertainty. If we were able to generate a quantitative measure of uncertainty (e.g., with Gaussian processes), this would help our users determine how much to trust our model’s decision. Finding ways to improve the resolution of our microscope would also help improve our accuracy.

Seizure Detection

Problem Statement

Epilepsy is a chronic and severe neurological disease that affects 65 million people worldwide. Although idiopathic in many cases, epileptic seizures may be caused by previous trauma to the brain such as hypoxia during labor, head injury, and/or bacterial meningitis. Beyond physiological symptoms caused by excessive neuronal activity in the brain, epilepsy is frequently the subject of social stigma, with patients often suffering from discrimination, misunderstanding, and ostracization from wider society due to the loss of self-reliance and autonomy. Although many cases can successfully be treated, treatment is often too inaccessible or expensive for patients, preventing them from treating the disease as well as mitigating its symptoms.

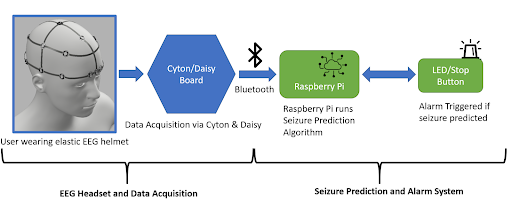

To address the treatment gap for epileptic seizures in low- and middle-income nations, we propose a simple and efficient seizure prediction system consisting of a neural network and an associated low-cost wearable alarm system. The comfort and safety features built into this system could greatly reduce mortality from sudden unexpected death in epilepsy (SUDEP) and general injury. By working towards this goal, we intend to allow patients not only to receive any urgent biomedical treatment in advance of the seizure, but also to mitigate potential injury from a sudden fall by simply lying prone.

Implementation

EEG Headset

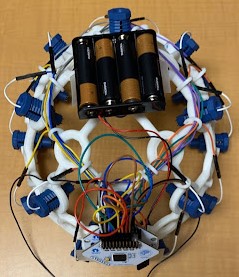

The electrodes necessary for EEG collection were procured from OpenBCI, a non-profit organization that seeks to lower the cost of EEG and other brain wave collection technologies. The electrodes (Figure 1) are encased in a plastic holding, where an elastic spring pushes the electrode into the user’s scalp. This design ensures that sufficient surface contact between the electrode and skin is maintained. Sixteen such electrodes are arranged on the helmet following the 10-20 brain EEG system.

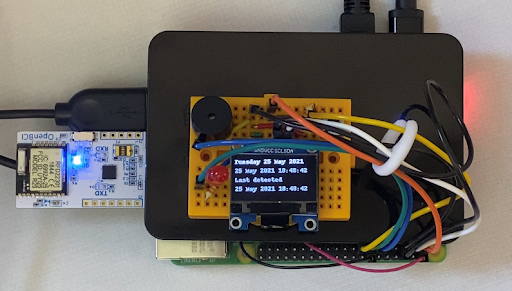

Alarm System

The alarm system consists of an LED, a piezo speaker, an SSD1306 OLED graphic display, and a push-button switch. As shown in the figure below, the circuit is wired to a Raspberry Pi 4, which also runs the machine learning model used for this project. The software that activates the circuit is integrated with the machine learning algorithm on the Raspberry Pi. Once the model detects an impending seizure from the preictal data streamed from the EEG’s Cyton microcontroller to the Raspberry Pi, the LED and buzzer give the user a visual and auditory signal that a seizure may be starting, and the OLED display shows a warning message along with the timestamp of the detection, as seen in the figure below. The date and time of each detection are also stored in a .txt file for future reference. The push-button switch is attached to the circuit for user convenience and can be used to turn off the sound or the blinking LED. For notification purposes, the date and timestamp of the last seizure warning remain on display as “last detected” so that users are still aware of the warning and can take the necessary precautions in time. The alarm system is small, which makes it portable and comfortable. It will be attached to the Raspberry Pi in a compact case and can be worn around the arm or wrist.
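The alarm behavior described above amounts to a small state machine. The sketch below models only that logic (warning state, button dismissal, "last detected" text, and the .txt log) and leaves out the GPIO and OLED calls; the class name, method names, and message strings are hypothetical rather than taken from our code.

```python
from datetime import datetime
from pathlib import Path


class SeizureAlarm:
    """Hardware-free model of the alarm logic: warn, log, dismiss, remember."""

    def __init__(self, log_path):
        self.log_path = Path(log_path)
        self.active = False          # True while the LED and buzzer should run
        self.last_detected = None

    def on_detection(self, when=None):
        """Call when the model flags preictal activity."""
        when = when or datetime.now()
        self.active = True
        self.last_detected = when
        with self.log_path.open("a", encoding="utf-8") as log:
            log.write(when.isoformat(sep=" ", timespec="seconds") + "\n")

    def on_button_press(self):
        """Silence the buzzer and LED but keep the timestamp available."""
        self.active = False

    def display_text(self):
        """Text for the display: active warning or the last detection time."""
        if self.last_detected is None:
            return "No seizure detected"
        stamp = self.last_detected.strftime("%Y-%m-%d %H:%M:%S")
        prefix = "WARNING: seizure" if self.active else "Last detected"
        return f"{prefix} {stamp}"
```

On the device, `active` would drive the LED and piezo speaker, and `display_text()` would be rendered on the SSD1306.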

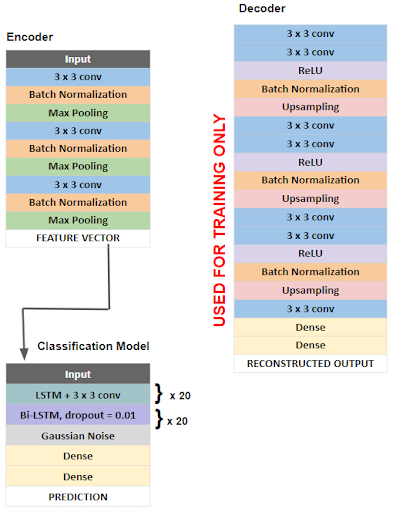

Neural Network Architecture

The overall architecture of our model is outlined in the figure below. First, a deep convolutional autoencoder (DCAE) was implemented and trained in an unsupervised manner on unlabeled, raw 5-second EEG signals. A deep convolutional autoencoder consists of an encoder that compresses input data into a low-dimensional representation and a decoder that reconstructs data back to its original dimensions. We chose this architecture as a method of feature extraction, as encoded EEG signals would serve as feature vectors that are more easily learned by the classification algorithm.

Our encoder consists of convolutional layers with batch normalization, ReLU activation, and max pooling layers, which reduce the input EEG segments of 1280 time points x 23 channels to a low-dimensional encoded representation of shape 160 x 184. The decoder then reconstructs the input signal through deconvolutional and upsampling layers. The DCAE is trained on the input signal with a mean squared error loss function, which measures the error in reconstructing the input signal. The Adam optimizer with a learning rate of 0.01 is used to minimize this loss function. After training, the optimized parameters are loaded into a pretrained model consisting only of the encoder layers, which compresses the raw EEG signal epochs into low-dimensional feature vectors that serve as inputs for the classification model. The classification task is performed by a bi-directional long short-term memory (bi-LSTM) recurrent neural network, trained with the Adam optimizer at a learning rate of 0.0002 and the binary cross-entropy loss function. Additionally, to reduce overfitting, we implemented residual (skip) connections within the bi-LSTM units.
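The shapes quoted above can be sanity-checked with a little arithmetic. A 5-second epoch of 1280 time points implies 256 samples per second, and three pooling stages of size 2 would take 1280 time points down to 160. The number and size of the pooling stages are assumptions made for this sketch; the text only states the input and output shapes.

```python
def split_into_epochs(signal, epoch_len=1280):
    """Cut a recording (a list of samples) into non-overlapping fixed-length epochs.

    Any trailing samples that do not fill a whole epoch are dropped.
    """
    n_epochs = len(signal) // epoch_len
    return [signal[i * epoch_len:(i + 1) * epoch_len] for i in range(n_epochs)]


def pooled_length(n_timepoints, pool_size=2, n_pools=3):
    """Length of the time axis after repeated max pooling (assumed 3 stages of 2)."""
    for _ in range(n_pools):
        n_timepoints //= pool_size
    return n_timepoints


print(1280 / 5)             # implied sampling rate in Hz
print(pooled_length(1280))  # time steps left after the assumed pooling stages
```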

After training, our model was able to achieve a validation accuracy of 96.59%, a validation precision of 97.72%, and a validation sensitivity of 97.72%.

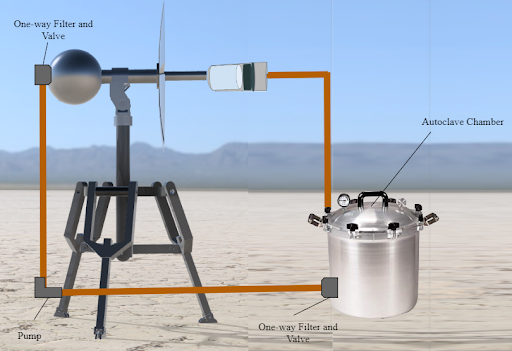

Solar Powered Autoclave

Problem Statement

In developing countries, there are limited affordable and accessible methods for sterilizing medical equipment, even though many medical procedures, such as surgeries and immunization shots, require sterile equipment. Traditional autoclaves are used in hospitals and laboratories for sterilizing equipment, but they consume a significant amount of power to create pressurized steam. This inhibits their use in developing countries, where access to electric power is limited. To address this need, we developed a solar-powered autoclave that concentrates sunlight to create steam for effective sterilization of the medical equipment placed in the autoclave chamber. The design combines a reflective, parabolic concentrator dish with detachable blades, a modified pressure-cooker sterilization chamber, and a tripod that allows for portability. The solar-powered autoclave thus provides a low-cost, low-energy option for sterilizing medical equipment in developing countries.

Implementation

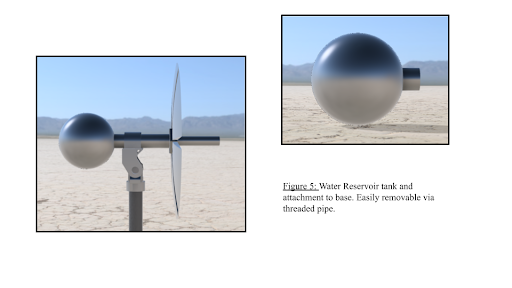

The key feature of our device that separates it from similar devices is its modularity. This allows for easy transportation of the device during manufacturing, easy movement of the device when in use, and greater flexibility in the situations in which the device can be used. To achieve this, the device is almost entirely collapsible thanks to threaded pipes and removable blades. The parabolic dish was designed to be 4 ft wide to maximize the amount of sunlight we could concentrate, with a curvature of 72 degrees to ensure light was focused onto a concentrator 3 ft away from the center of the dish.
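For an ideal paraboloid, the dish width and focal distance fix the rest of the geometry. The sketch below uses the textbook relations depth = D²/(16f) and tan(ψ/2) = D/(4f) for the rim angle ψ. It assumes that the 3 ft distance to the concentrator is the focal length, and it does not attempt to reproduce the 72-degree curvature figure, whose definition is not given here.

```python
import math


def dish_depth(diameter, focal_length):
    """Depth of an ideal parabolic dish at its center (same units as the inputs)."""
    return diameter ** 2 / (16 * focal_length)


def rim_angle_deg(diameter, focal_length):
    """Angle at the focus between the dish axis and a ray to the rim, in degrees."""
    return math.degrees(2 * math.atan(diameter / (4 * focal_length)))


# 4 ft dish with an assumed 3 ft focal length.
print(round(dish_depth(4.0, 3.0), 3), "ft deep")
print(round(rim_angle_deg(4.0, 3.0), 2), "degree rim angle")
```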

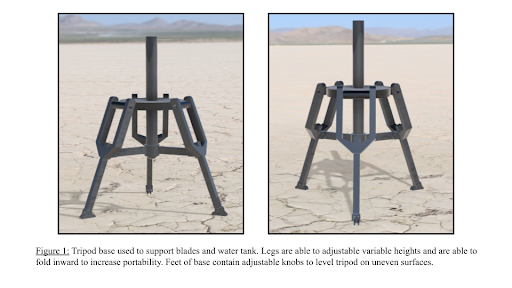

Tripod Support

The foundation of our device is the three-legged tripod used to support the solar concentrator as well as the water reservoir. The adjustable height and ability to level the device make it adaptable to almost all surfaces and allow for convenient placement of the device.

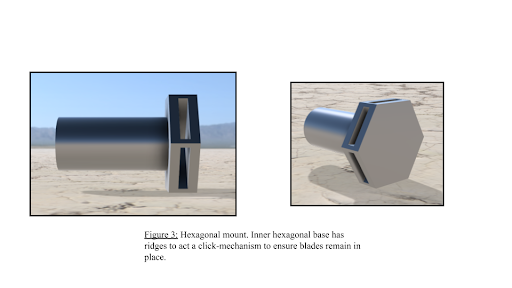

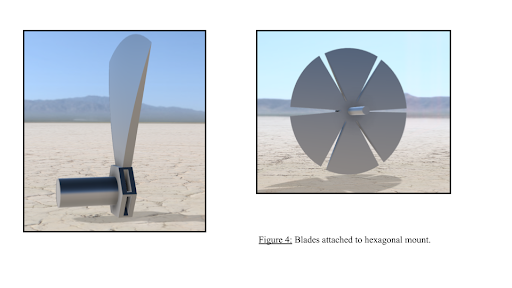

Hexagonal Base

The hexagonal base is the key component that allows the blades of the solar concentrator to be removed and placed with ease. The pole-arm to which the hexagonal base is attached is threaded so that it can easily be attached to the tripod attachment component. The base contains six slots, each designed to secure a blade in place via a “click mechanism”, so the blades that form the solar concentrator can be attached and removed easily. Additionally, the blades are made of lightweight aluminum, which not only provides high reflectivity but also allows the device to be easily assembled and disassembled.

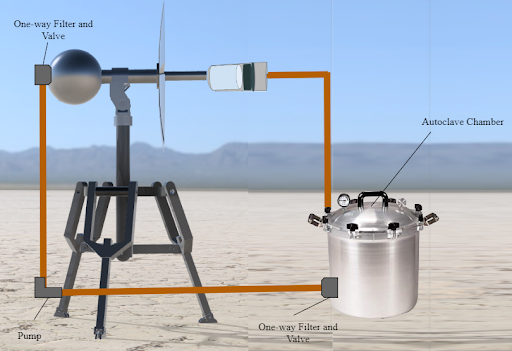

Steam Generation

To allow the device to be run continually without constant attention we included a one-foot diameter sphere that can hold roughly 15 liters of water. This reservoir also serves as a counterweight to the solar concentrator. This helps keep the center of gravity within the three legs of the tripod which prevents the possibility of tipping. To transport water from the reservoir to the concentrator and to the sterilization chamber one-inch copper tubing is used due to its ability to conduct heat and its durability. A pump is also utilized to assist the water in reaching its destination. The pump is a simple on/off pump that needs no special requirements. To allow steam to leave the autoclave and recondense into water, the pressure relief valve of the sterilization chamber is connected to a check valve to allow for one way air flow and one-inch steel tubing connecting the chamber to the reservoir. Additionally, a HEPA filter placed inside the check valve catches any particles that may have appeared in the steam before it is transferred back to the water reservoir.
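The reservoir capacity quoted above can be checked from the sphere's diameter. The sketch treats the reservoir as an ideal sphere with a one-foot (0.3048 m) inner diameter, which ignores wall thickness and fittings.

```python
import math


def sphere_volume_liters(diameter_m):
    """Volume of a sphere in liters, given its diameter in meters."""
    radius = diameter_m / 2
    return 4 / 3 * math.pi * radius ** 3 * 1000  # 1 cubic meter = 1000 liters


# One foot = 0.3048 m; the result is close to the "roughly 15 liters" stated above.
print(round(sphere_volume_liters(0.3048), 2))
```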

Our steam generator model is composed of a few main parts that allow water to be converted to steam. As shown in Figure 4, 3 feet of copper tubing protruding from the blades connects the reservoir to the steam generator. The steam generator is a carbon foam layer topped with a perforated sheet of graphite-coated copper, encapsulated in a plexiglass tube to create a light-trapping system for steam generation. Although unconventional, this proprietary design was tested and found to heat water up to 86 °C by itself and to allow a rate of steam flow matching the requirements for autoclaves [13], so when coupled with the blades concentrating sunlight, it should be able to heat the water to above 121 °C and meet industry standards.

The sterilization chamber, in which the objects to be sterilized are placed, is simply a 30-liter pressure cooker with a screw-on lid and 5-way lock. The chamber also contains a pressure relief valve that slowly lets steam out of the chamber to prevent over-pressure.

Ultrasonic Vision for the Visually Impaired

Problem Statement

At least 2.2 billion people worldwide suffer from blindness or visual impairment. These conditions are estimated to be four times more prevalent in low- and middle-income regions and are most prevalent in developing countries, where conditions such as malnutrition, lack of sanitation, and inadequate health services result in a high incidence of eye disease. Previous efforts to reduce the occurrence of blindness in developing countries include preventative screening programs like the eye-care services assessment tool implemented by the World Health Organization. However, fewer measures have been taken to provide widespread support for those who cannot be treated with cataract surgery, have chronic visual impairment, or are waiting to receive surgery. With our device, we aim to provide a cost-effective, easy-to-use way for blind and visually impaired people to detect the location of nearby objects. We are developing a haptic-feedback navigational system that utilizes ultrasonic sensors and a Bluetooth system.

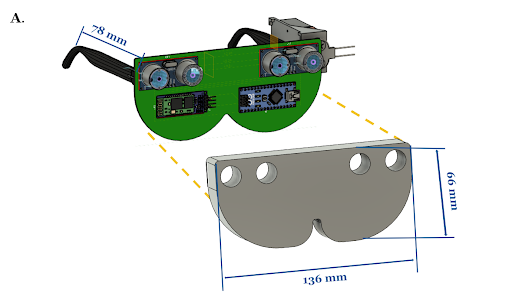

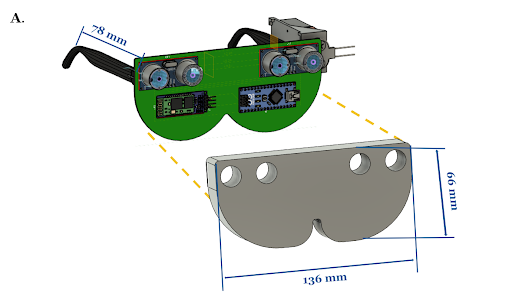

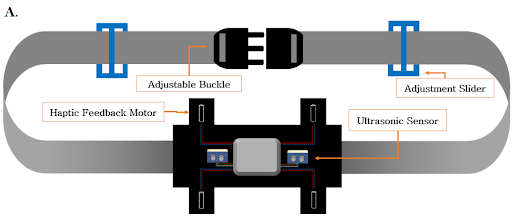

Implementation

Our device is an affordable system that consists of a belt and glasses that detect objects within approximately 2 m of the lower body and upper body, respectively. To better serve blind users in developing communities, our system can be used in all environments without requiring connectivity to a Wi-Fi network. As it is powered by simple batteries, it requires minimal repairs and updates, and it can be worn by virtually anyone due to its adjustable features.

When wearing the glasses and the belt, the user will be able to navigate around obstructions. Upon sensing an object within the range of the ultrasonic sensors, the device will notify the user through the use of vibrational haptic feedback. The feedback will be localized in the motors that are in the direction of the object. If the user gets closer to the object, the haptic feedback will increase in intensity, and if the user gets further from the object, the haptic feedback intensity will decrease until the user no longer feels vibration patterns due to that object. The device will send a different vibration pattern based on whether the glasses or the belt sensed the object, allowing the user to distinguish between the two and act accordingly.

Glasses

The glasses board contains two HC-SR04 ultrasonic sensors connected to an Arduino Nano. The Arduino Nano is connected to an HC-05 Bluetooth module and is powered by a 9V battery. The Bluetooth module transmits its data to the Bluetooth module on the belt board. The board is attached to two arms so that it can be worn like conventional glasses. The ultrasonic sensors are placed 5 cm apart to minimize interference and their blindspot.

The glasses continuously read in values from the two corresponding ultrasonic sensors to detect objects in the direction the user is facing. This data is sent over to the belt serially through Bluetooth. Values from the left sensor are sent as odd values, and values from the right sensor are sent as even values so that the belt module can differentiate between the two sensors.
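One simple way to realize the odd/even convention is to fold the sensor identity into the lowest bit of the transmitted integer. The sketch below shows that scheme in Python for readability; the firmware itself runs on the Arduino, and the exact encoding we used may differ, so the `2 * distance + 1` mapping and the function names are assumptions.

```python
def encode_reading(sensor, distance_cm):
    """Pack a whole-centimeter distance so left readings are odd and right are even."""
    if sensor not in ("left", "right"):
        raise ValueError("sensor must be 'left' or 'right'")
    if distance_cm < 0:
        raise ValueError("distance_cm must be non-negative")
    return 2 * int(distance_cm) + (1 if sensor == "left" else 0)


def decode_reading(value):
    """Recover (sensor, distance_cm) from a transmitted value."""
    sensor = "left" if value % 2 == 1 else "right"
    return sensor, value // 2


print(encode_reading("left", 57), encode_reading("right", 57))
print(decode_reading(115), decode_reading(114))
```

The belt can then route each incoming value to the correct motor with a single parity check, without needing a separate framing byte.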

Belt

The TCA9548A multiplexer is connected to four separate DRV2605L motor drivers and utilizes the Inter-Integrated Circuit (I2C) communication protocol to transmit signals to each vibrating motor to begin haptic feedback. In addition to these components, the belt board also consists of two HC-SR04 ultrasonic sensors, an Arduino Nano, a 9V battery, and one of the HC-05 Bluetooth modules. The ultrasonic sensors are placed 17 cm apart in order to minimize interference and their blindspot, and the 40° downward angle is maintained with foam wedges.

The belt reads in values from the two corresponding ultrasonic sensors to detect objects within the lower region. Because these sensors are angled downward by 40°, the distance typically read by these sensors is the distance to a point on the ground ahead. The device is calibrated within the first 5 seconds and calculates 5 different vibration intensity levels based on the distance from the user’s waist to the ground. The belt module also receives the data from the two sensors on the glasses. Four different motors are attached to the belt to vibrate based on which sensor detected an object: two motors in one row corresponding to the glasses ultrasonic sensors and two more motors in a row directly under the former corresponding to the belt ultrasonic sensors. As an object gets nearer, the intensity of the haptic feedback increases. The haptic feedback vibration pattern is also different based on whether the feedback is coming from the sensors on the glasses or the belt.
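The calibration and five-level mapping can be sketched as below, again in Python for readability rather than as the Arduino firmware. The sketch assumes that the baseline is the average of the readings taken during calibration, that the range below the baseline is divided into five equal bands, and that readings at or beyond the baseline mean no obstacle; the real thresholds may differ.

```python
from statistics import fmean


def calibrate(readings_cm):
    """Baseline distance to the ground, averaged over the calibration readings."""
    return fmean(readings_cm)


def intensity_level(distance_cm, baseline_cm, n_levels=5):
    """Map a reading to a vibration level: 0 = off, n_levels = strongest (nearest)."""
    if distance_cm >= baseline_cm:
        return 0  # the sensor sees the ground (or nothing), so no obstacle
    band = baseline_cm / n_levels
    band_index = min(int(max(distance_cm, 0) // band), n_levels - 1)
    return n_levels - band_index


baseline = calibrate([150, 152, 148])
for reading in (200, 149, 90, 30, 10):
    print(reading, intensity_level(reading, baseline))
```

Tying the bands to the calibrated baseline lets the same belt adapt to users of different heights.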

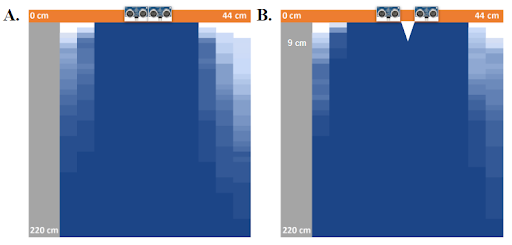

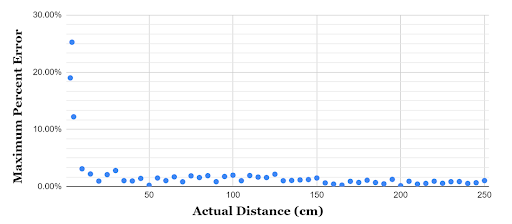

Accuracy

The field of view and blind spot of two ultrasonic sensors were measured at two sensor configurations: 0 cm and 5 cm apart. Given the size of the glasses, 5 cm is the maximum possible separation, while 0 cm is the minimum. At both configurations, a flat box (16 cm x 5.7 cm x 1.5 cm) was used to determine how visible objects were at various points. The field of view was largest at 5 cm apart. The 5 cm configuration resulted in a triangular blind spot between the sensors that extends out 9 cm. This blind spot is not large enough to interfere with the practical applications of our device, as objects that a user might encounter outside are likely to a) pass through a visible range before falling within the blind spot or b) be large enough to fall partially inside and partially outside the blind spot. With this information in mind, the 5 cm configuration was chosen for the glasses in order to maximize field of view.

The accuracy of the sensor’s distance measurement was determined by comparing the distance in cm that was detected by the sensor to the actual measured distance of the object. Beyond 5 cm, the sensors were found to have a maximum percent error of 3.10%, with the sensor becoming more accurate at further distances.
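The comparison described above is a standard percent-error calculation. A minimal sketch follows; the function names are hypothetical and any values passed in are illustrative, not the team's measurements.

```python
from typing import Iterable, Tuple


def percent_error(measured_cm: float, actual_cm: float) -> float:
    """Absolute percent error of a sensor reading against the true distance."""
    return abs(measured_cm - actual_cm) / actual_cm * 100.0


def max_percent_error(pairs: Iterable[Tuple[float, float]]) -> float:
    """Largest percent error over (measured, actual) pairs."""
    return max(percent_error(measured, actual) for measured, actual in pairs)
```

Because the error is divided by the actual distance, a fixed offset of a few millimetres shrinks as a percentage at longer ranges, which is consistent with the sensors appearing more accurate farther out.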

Arrhythmia Detection

Problem Statement

Cardiovascular diseases, including cardiac arrhythmias, are a major health issue in low-income countries, such as those in sub-Saharan Africa. Due to the lack of proper diagnostic equipment, non-communicable diseases (NCDs) are the second leading cause of death in these parts of the world, accounting for nearly 35% of all annual deaths. Lack of access to hospitals with cardiac resources and a shortage of personnel trained to diagnose cardiac arrhythmias are key contributing factors to the high death toll from cardiac diseases in these areas. The average cost of an electrocardiogram (ECG) in Cameroon is $180, which is 2.7 times the average minimum monthly wage there. Furthermore, large rural populations may not have the transportation they need to reach healthcare. This leaves hundreds of millions of people without access to the basic arrhythmia detection that is considered standard in most parts of the world.

In order to combat this problem, we are aiming to develop a low-cost and accessible method of cardiac arrhythmia detection that would address the needs of these communities. We propose a solution which consists of two parts: 1) a mobile app which uses a novel machine learning algorithm to detect cardiac arrhythmia and 2) a wearable device which utilizes an optical pulse sensor to collect data that can be converted into ECG data. The device will be designed such that patients may use it as an alternative to a Holter monitor, which is commonly used to record ECG data for long periods of time. From the ECG data collected by the device, the algorithm will be able to detect multiple types of arrhythmia.

Implementation

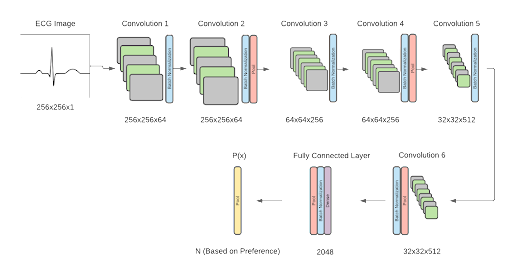

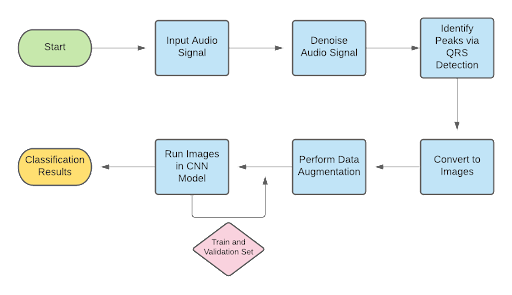

Neural Network

In order to categorize the patient’s ECG as normal or abnormal, a binary algorithm was created. The normal category indicates that the patient’s heartbeat is healthy and falls within the normal length between QRS indices, and the abnormal category tells the user that they may have a type of cardiac arrhythmia. The type of abnormality can be specified further by extending the algorithm to a multi-class classification model, with 7 types of abnormalities defined (Premature Atrial Contraction, Premature Ventricular Contraction, Paced Beat, Right Bundle Branch Block Beat, Left Bundle Branch Block Beat, Ventricular Flutter Wave Beat, and Ventricular Escape Beat). The framework for the software was designed as shown below. The model structure uses a convolutional neural network, adapted from Jun’s ECG arrhythmia classification. The proposed binary classification model yielded an accuracy of 99.06%. Initial tests on multi-class classification of heartbeats using the proposed architecture yielded an accuracy of 98.95%.

Device

As a cheaper alternative to an ECG machine, an optical pulse sensor was used as the sensor to take heartbeat measurements for the prototype. An optical pulse sensor can collect pulse data which is then converted to an ECG signal using a process that has previously been used to convert piezoelectric sensor data to ECG data. An initial prototype is shown below.

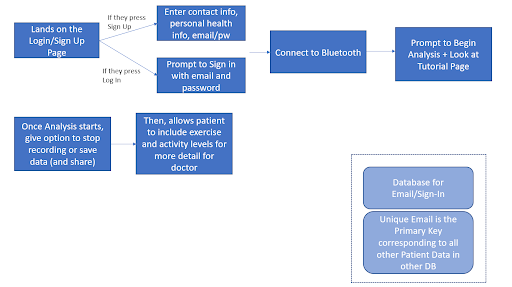

Mobile App

The designed algorithm was housed in the form of an app that would allow patients to easily access the features of the device and algorithm. The figure below represents the flow chart for the app.

Malaria Detection

Problem Statement

Malaria is a disease caused by parasites transmitted through bites from female mosquitoes. Symptoms of malaria include fever, vomiting, and even death in severe cases. There were an estimated 228 million malaria cases worldwide in 2018, with an estimated death toll of 405,000 people. Malaria has a strong presence in developing countries, especially within regions of Africa where 93% and 94% of total malaria cases and deaths occurred, respectively. The World Health Organization (WHO) suggests that “prompt diagnosis and treatment is the most effective way to prevent a mild case of malaria from developing into severe disease and death.” However, the WHO reports that a considerable proportion of children who exhibited symptoms of a fever (median 36%) did not seek any medical attention whatsoever, listing poor access to healthcare and ignorance of malaria symptoms as notable contributing factors.

The diagnosis of malaria from blood smears usually requires a highly skilled technician to count and classify red blood cells. The tasks of counting infected blood cells and calculating infection rates are better left to computers than to humans, especially since most developing countries already have microscopes available but lack the resources to train technicians to interpret blood smears properly. To alleviate the impact of healthcare worker shortages in developing countries, we created machine learning-based software to help automate these two tasks. With our approach, clinicians still operate the microscope and stain the blood smears, but the technical burden of interpreting images and counting infected cells is offloaded to computers. In turn, places that once lacked trained technicians can now benefit from more screening sites due to the reduced need for specialization. Clinicians can focus on treating patients rather than deciphering blood smears and allow technology to streamline the malaria diagnosis process.

Implementation

Training and Results

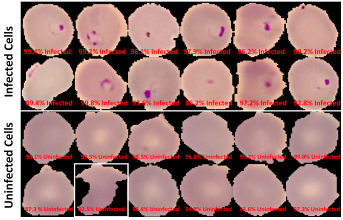

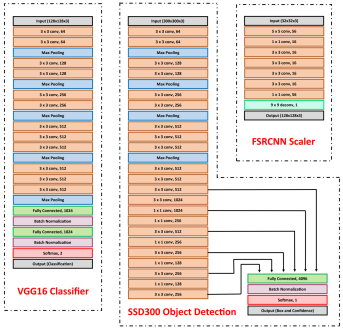

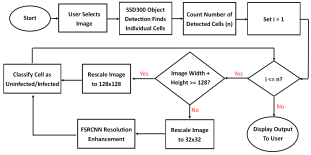

Our model consists of three neural networks that process the blood smear sequentially: an object detection model, a resolution enhancement model, and a cell classification model.

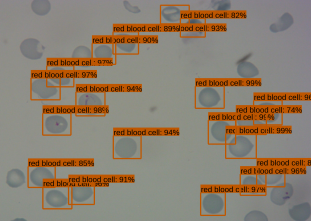

The object detection model consists of a pre-trained single shot multibox detector with an image input size of 300×300 (SSD300). The SSD300 object detection model is able to detect the presence of red blood cells with an average precision of 90.4% when the Intersection over Union (IoU) threshold is 0.50, across all area sizes.
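For readers unfamiliar with the IoU criterion, the sketch below shows how the overlap between a predicted box and a ground-truth box is usually computed. It is a generic illustration, not code from the project, and the `(x_min, y_min, x_max, y_max)` box layout is an assumption.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), assumed layout


def iou(box_a: Box, box_b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap, clamped to zero when the boxes are disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0
```

At a threshold of 0.50, a detection counts as correct only when `iou(predicted, truth)` is at least 0.5.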

The resolution enhancement model is a faster super-resolution convolutional neural network (FSRCNN) with four mapping layers, a high-resolution dimension of 56, and a low-resolution dimension of 16. The FSRCNN model takes in low-resolution 32×32 images and artificially enhances them to high-resolution 128×128 images to maximize the amount of detail available to the subsequent classifier. The best-performing FSRCNN has a peak signal-to-noise ratio (PSNR) of 30.79 and a mean squared error (MSE) of 54.66. In contrast, the traditional method of bicubic interpolation yielded a PSNR of 24.10 and an MSE of 254.67. In addition, the FSRCNN-derived images are classified more accurately than the raw low-resolution images or bicubic-interpolated images in the finalized CNN classification model.
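PSNR and MSE are linked by a standard formula, which makes the two reported metrics easy to sanity-check. The sketch below assumes 8-bit images (peak pixel value 255); that peak value and the function name are assumptions, not details stated by the project.

```python
import math


def psnr_from_mse(mse: float, max_pixel: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB for a given mean squared error."""
    if mse <= 0:
        raise ValueError("mse must be positive")
    return 10.0 * math.log10(max_pixel ** 2 / mse)
```

Under that assumption, `psnr_from_mse(54.66)` gives roughly 30.75 dB and `psnr_from_mse(254.67)` gives roughly 24.07 dB, close to the reported 30.79 and 24.10. A small gap of this kind would be expected if PSNR was averaged per image rather than derived from the average MSE.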

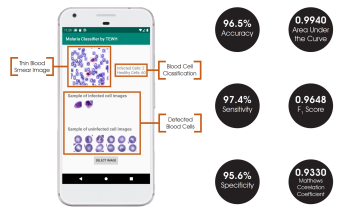

The final classification model is a variant of the VGG16 neural network, with batch normalization layers implemented after each dense layer, before the activation function. Each dense layer consists of 1024 nodes instead of the standard 4096 nodes to reduce the computational burden. Our final VGG16 classifier model had an accuracy of 96.5%, sensitivity of 97.4%, specificity of 95.6%, and AUC of 0.9940. The full architectural details of all three models are shown below.
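Accuracy, sensitivity, and specificity all follow from the four cells of a confusion matrix. The sketch below shows the definitions, treating "infected" as the positive class; the function name is hypothetical and the project's actual confusion matrix is not given here.

```python
from typing import Dict


def classification_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Accuracy, sensitivity (recall on positives), and specificity (recall on negatives)."""
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }
```

As a purely hypothetical example, a balanced test set of 1,000 infected and 1,000 uninfected cells with `tp=974, fn=26, tn=956, fp=44` would give a sensitivity of 97.4%, a specificity of 95.6%, and an accuracy of 96.5%. These counts are invented to illustrate the arithmetic and are not the project's data.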

App Development

While all models were developed and trained with the TensorFlow and Keras packages, each final model is converted into a .tflite file so that it can run on TensorFlow Lite. TensorFlow Lite is an open-source platform focused on on-device model inference. Unlike previously reported studies that use phone apps for model prediction, this allows the models to run directly on Android-based smartphones rather than relying on cloud-based computing resources.

The Android app takes in a user-selected image of a Giemsa-stained thin blood smear. Then, the SSD300 model isolates individual images of the red blood cells and discards images of white blood cells. If the individual images are of low resolution, the FSRCNN model enhances the resolution. Finally, each red blood cell image is classified by the VGG16 model to count the number of uninfected and infected red blood cells, as shown in Figure 2. The file sizes of the SSD300, FSRCNN, and VGG16 models are 95 MB, 5 MB, and 20 MB, respectively, for a total of 120 MB, which is below the 200 MB file size limit. A summary of our data pipeline is depicted below.
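The control flow of this pipeline can be sketched independently of the models themselves. In the sketch below the three models are passed in as plain callables; `detect`, `enhance`, `classify`, the `"rbc"` label, and the `min_side` threshold are all hypothetical stand-ins, not the app's real interfaces.

```python
from typing import Callable, Dict, Iterable, List, Tuple

Image = List[List[int]]  # a crop as rows of pixel values (simplified for illustration)


def count_cells(
    smear: object,
    detect: Callable[[object], Iterable[Tuple[str, Image]]],
    enhance: Callable[[Image], Image],
    classify: Callable[[Image], bool],
    min_side: int = 128,
) -> Dict[str, int]:
    """Run detection, optional super-resolution, and classification in sequence."""
    counts = {"infected": 0, "uninfected": 0}
    for label, crop in detect(smear):
        if label != "rbc":
            continue  # white blood cells and other detections are discarded
        height, width = len(crop), len(crop[0])
        if min(height, width) < min_side:
            crop = enhance(crop)  # upscale only the low-resolution crops
        counts["infected" if classify(crop) else "uninfected"] += 1
    return counts
```

The infection rate then follows as `counts["infected"]` divided by the total number of red blood cells counted.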

Patient Health Monitor

Problem Statement

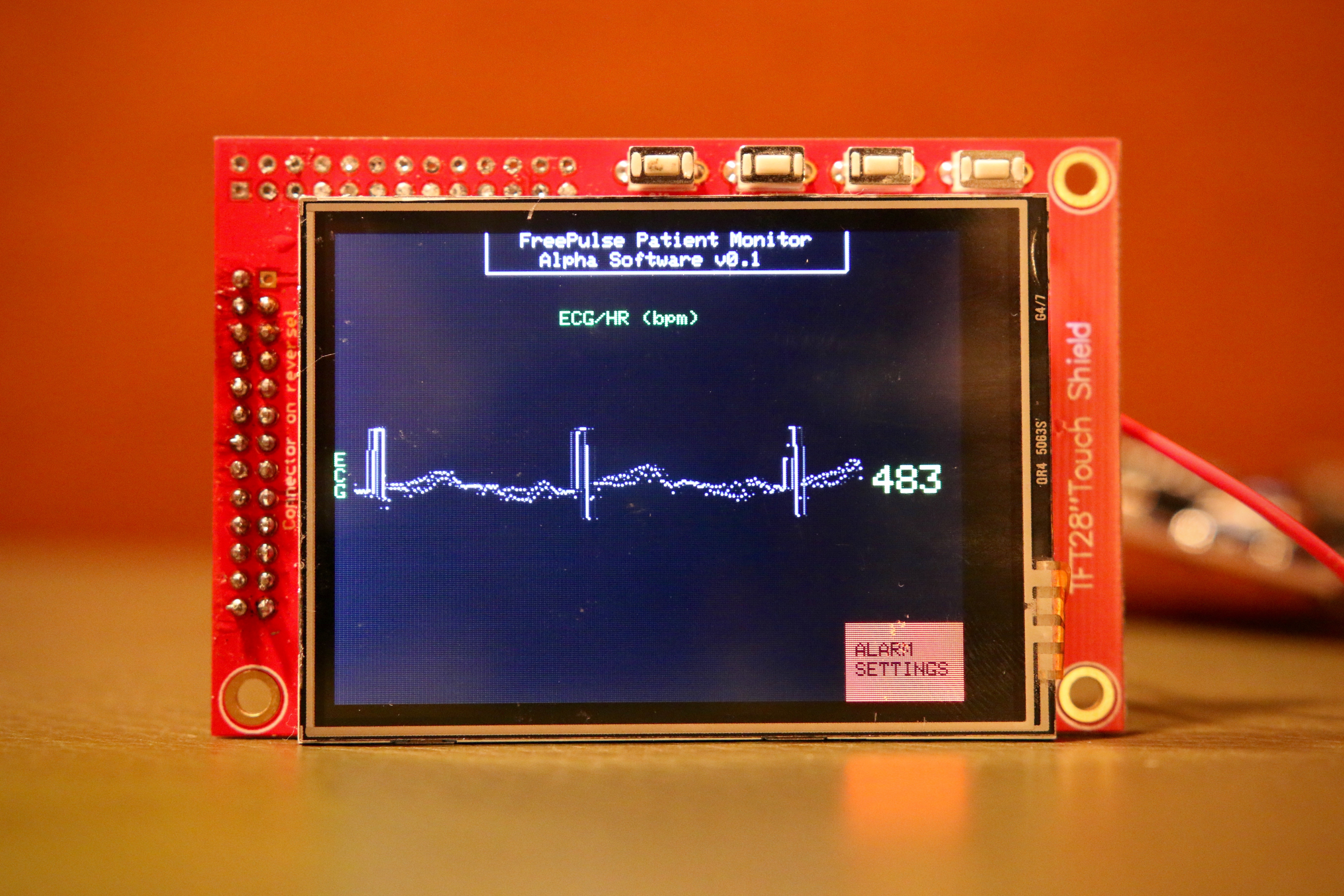

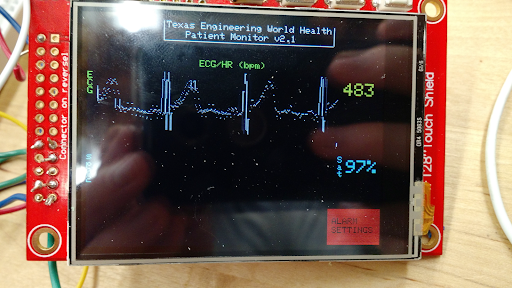

A patient monitor is a mechanical and electrical medical device designed to continually monitor a patient’s vital signs in order to identify respiratory or cardiac distress. Patient monitors play a crucial role in virtually all aspects of medical care, including emergency and surgical units, intensive and critical care units, and other non-critical units. Patient monitors are a staple item in developed world hospitals; however, in crowded developing world hospitals, a low patient-monitor-to-patient ratio prevents doctors from performing a sufficient number of surgeries to meet the needs of the local population and from effectively treating patients in critical care. This shortage of patient monitors can be attributed primarily to the cost of current market models, which ranges from $1,000 to $10,000. This high cost forces developing hospitals to depend largely on outside donations for new devices, an infrequent and unreliable source of critical equipment. There is a clear and demonstrated need for a low-cost patient monitor that can be purchased in bulk by developing world hospitals, thus giving many more patients the monitoring they need. Our project, the FreePulse low-cost patient monitor, aims to fill this gap in developing world healthcare and provide a durable, reliable, and accessible monitor. FreePulse provides basic monitoring for heart rate, electrocardiogram signals (ECG), and percent saturated oxygen (SpO2) with analog and digital filtering. Visual alerts are implemented to warn nurses if a patient begins to experience cardiac or respiratory distress, matching the capability of current market devices. A replaceable backup battery and uninterrupted power circuit ensure that the device continues to function even in unstable power conditions.

Implementation

The FreePulse patient monitor is designed with the developing world in mind. Its portable and durable casing and design make it an excellent fit for a wide variety of medical applications.

Material

A sliding latch with an O-ring seal is one of the key features that contribute to the durability of the FreePulse monitor. The O-ring makes the monitor and interior circuit components waterproof. Additionally, the latch allows easy access to these interior components, thus eliminating the need for screws in the FreePulse design. This lack of screws is a major plus for use in the developing world due to the tendency of screws to strip or break under stress. Another design choice that improves the durability of the FreePulse monitor is the use of ABS plastics on the exterior. The use of ABS plastics makes the FreePulse casing very resistant to chemical corrosion, increasing the device’s overall life span. Finally, the production model of FreePulse will include resin-coated electrical components, so that fluctuations in humidity will have negligible effects on the circuit. This feature, combined with the O-ring design, provides a fail-safe mechanism for protection from accidental water exposure.

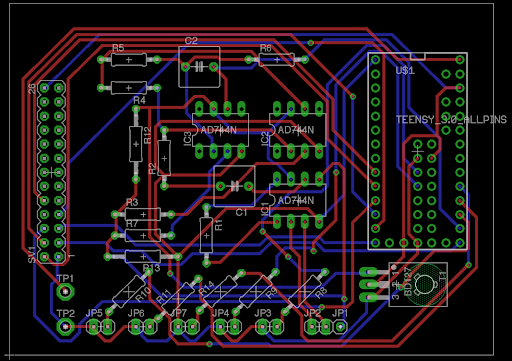

Circuitry

The Teensy microcontroller is the heart of the FreePulse circuit. Its low cost coupled with its 74 MHz processing speed gives FreePulse the sampling rate of a state-of-the-art monitor without the price point. This processing power is used to manage the sampling rates for both the ECG and SpO2 probes as well as to run the display and power all of the connected peripheral circuits. Furthermore, low-cost miniaturized voltage regulators are used to run the entire circuit from a single voltage source despite differing components and even differing grounds. The ECG circuit uses an active low-pass filter to amplify physiological signals up to 5000 times, while both passive and active filters are used to reject noise and extract a clear waveform to display on the screen. The lithium ion battery in FreePulse has a capacity of 1200 mAh; we have measured an average current draw of 185 mA with all LEDs illuminated and probes running, meaning the system can run for approximately 6 hours on a single charge. Overall, these disparate hardware components communicate smoothly through the GPIO pins of the Teensy microcontroller, producing a quality signal that rivals the functionality of current market competitors, but at a fraction of the cost.
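The runtime figure follows directly from dividing capacity by average draw. The short sketch below shows the arithmetic under an idealized assumption: it ignores regulator losses, battery aging, and the low-voltage cutoff, so the real runtime will be somewhat shorter.

```python
def runtime_hours(capacity_mah: float, draw_ma: float) -> float:
    """Ideal battery runtime in hours for a constant average current draw."""
    if draw_ma <= 0:
        raise ValueError("draw must be positive")
    return capacity_mah / draw_ma
```

With the figures above, `runtime_hours(1200, 185)` is about 6.5 hours, which is in line with the stated estimate of approximately 6 hours once real-world losses are allowed for.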

Testing

Currently, the monitor supports SpO2 and ECG readings. However, the same circuit can be expanded to monitor muscle activation, track patient temperature fluctuations, and support body imaging. This extensible design allows damaged modules to be swapped out easily and effectively. It also allows seamless hardware upgrades, so the user does not have to replace the entire system. This key feature of the FreePulse monitor reduces waste and emphasizes sustainability.

ECG Acquisition

The most complex hardware component of our design is the filtering and amplification circuit for an ECG signal. Critical to the success of signal acquisition is a sampling rate that allows for clear visualization of the input signal; we have set the sampling rate at a controlled 121 Hz to achieve optimal signal clarity while retaining a smooth and responsive user interface.

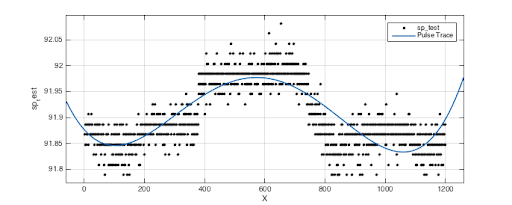

Capillary Oxygen Saturation

Our current prototype uses both visible and IR LEDs paired with phototransistors to measure the amount of light transmitted through a user’s tissue. The LEDs and phototransistors are housed in a plastic case similar in form factor to traditional finger clip pulse oximeters, and the output of the phototransistors is filtered by a passive low-pass filter. We are still developing our calibration algorithm for calculating oxygen saturation from this curve, as it is a very hardware-dependent process; however, initial signal processing is already showing a clear view of the transmittance waveform.
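Pulse oximeters commonly start from a "ratio of ratios" between the two wavelengths before any calibration is applied. The sketch below illustrates only that generic first step. It is not the FreePulse algorithm, the function names are hypothetical, and the hardware-specific mapping from this ratio to an SpO2 percentage is deliberately left out.

```python
from statistics import mean
from typing import Sequence


def perfusion_ratio(samples: Sequence[float]) -> float:
    """Pulsatile (AC) amplitude divided by the steady (DC) level of one channel."""
    dc = mean(samples)
    ac = max(samples) - min(samples)  # peak-to-peak swing over the window
    return ac / dc


def ratio_of_ratios(visible: Sequence[float], infrared: Sequence[float]) -> float:
    """Visible-channel perfusion ratio divided by the infrared one."""
    return perfusion_ratio(visible) / perfusion_ratio(infrared)
```

Normalizing each channel by its own DC level removes most of the dependence on LED brightness and finger thickness, which is why the calibration curve is usually fitted against this ratio rather than against raw intensities.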