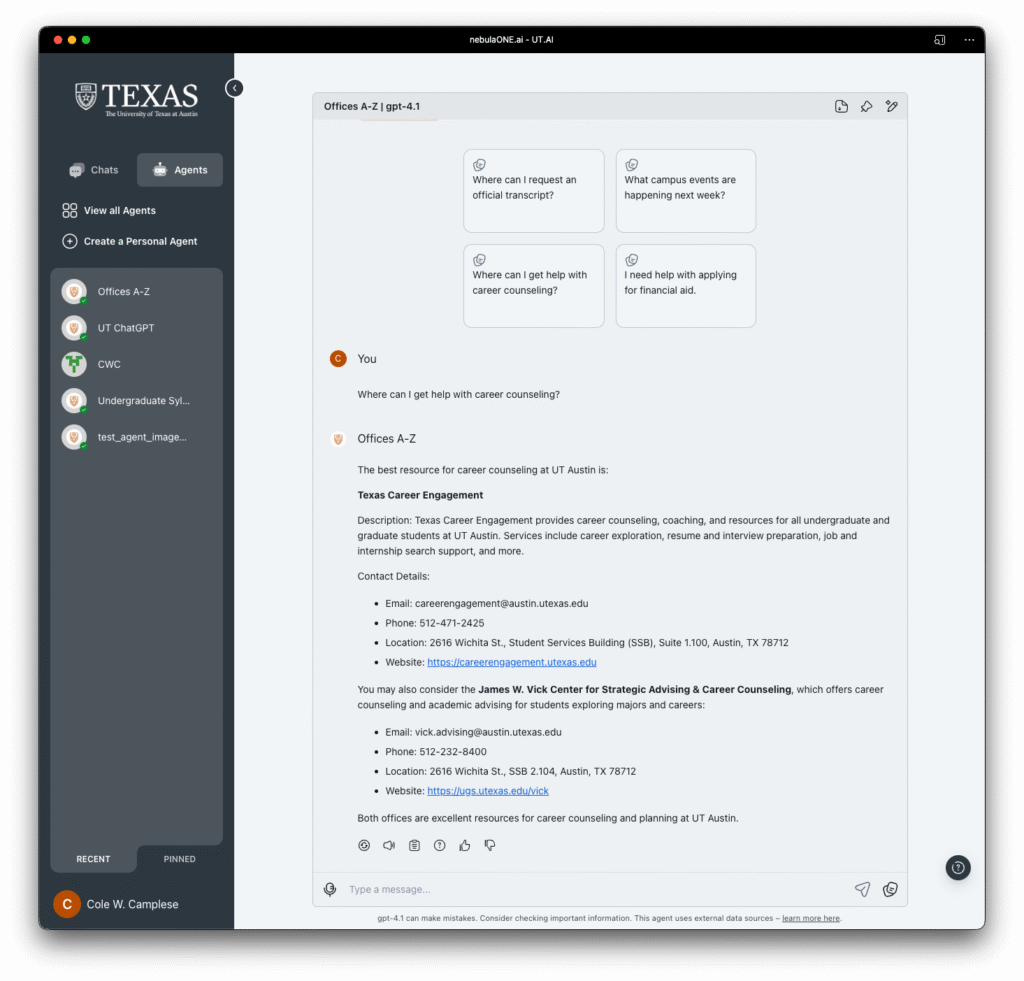

Part of our campus AI journey is to design and deploy AI agents that can utilize key information from existing websites across campus. These agents may replace the sites, reducing technical bloat and information drift. While doing so, an unexpected benefit has emerged, one that speaks volumes about the evolving relationship between technology and content strategy on a highly decentralized campus.

When we first set out to build these agents, we did what most teams do: we pointed them at our sites or their underlying data, ingested the knowledge, tested retrieval, and began crafting conversations. But something interesting happened when we put these agents to work. People started asking for things we couldn’t give them.

In short, the agents began surfacing questions we hadn’t anticipated; questions students, faculty, staff, and prospective Longhorns are likely asking every day. And, just as importantly, they showed us where our data and content fell short.

They have become mirrors, reflecting the structure, and the fragmentation, of our institutional knowledge. The things they cannot answer point directly to gaps in the content architecture: outdated FAQs, scattered documentation, siloed policy pages, and even buried gems of information lost in PDF archives or legacy web systems. It’s not that the information doesn’t exist. It’s that it’s too hard to find, inconsistently written, or lacks the context necessary to form a coherent response. We are sure the agent isn’t making mistakes per se; it tells us what it can’t say, and that’s been incredibly valuable.

One of the more revealing moments for me came when we began evaluating how the agent performed with the A–Z directory. This is a resource that has long served as the backbone for finding services and offices across the university. But once we put the agent to work with this data, the limitations of that system became painfully clear. What we had assumed was structured, complete, and reliable turned out to be limited, outdated, and in some cases, misleading.

This has been a bit of a wake-up call. It is so tempting to take a “lift and shift” approach: move what we have on the web into the AI agent and assume it will just work. But that does not hold up. The agent exposes what the web often hides. It forces precision. It requires context. And it absolutely demands trust in the data that fuels it.

We are now integrating these insights into a more systematic approach. Each time a query breaks down, we want to trace it back. The questions we need to ask are: Should this information exist? If so, where should it live? Can we make it easier to find, easier to understand, and easier for the agent to serve up confidently?
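To make that tracing step concrete, here is a minimal sketch of how failed queries might be logged and tallied by the kind of content gap they expose. Everything in it is an assumption for illustration, not a description of our actual tooling: the `FailedQuery` record, the three `GapKind` labels (loosely mapped to the questions above), the `summarize_gaps` helper, and the `example.edu` site names are all hypothetical, and the gap label is assumed to be assigned by a human reviewer.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class GapKind(Enum):
    # Hypothetical labels, loosely mirroring the triage questions above.
    MISSING = "information does not exist yet"
    MISPLACED = "information exists but lives in the wrong place"
    UNCLEAR = "information exists but is hard to find or understand"


@dataclass(frozen=True)
class FailedQuery:
    question: str     # what the user asked the agent
    source_site: str  # hypothetical: the site the agent drew on
    gap: GapKind      # assumed to be assigned by a human during triage


def summarize_gaps(failures):
    """Count failed queries per (site, gap kind), most frequent first."""
    counts = Counter((f.source_site, f.gap) for f in failures)
    return counts.most_common()


# Made-up sample data, only to show the shape of the report.
failures = [
    FailedQuery("Where do I renew a parking permit?", "parking.example.edu", GapKind.UNCLEAR),
    FailedQuery("How do I renew my permit online?", "parking.example.edu", GapKind.UNCLEAR),
    FailedQuery("Who approves lab access after hours?", "facilities.example.edu", GapKind.MISSING),
]

for (site, gap), n in summarize_gaps(failures):
    print(f"{n}x {site}: {gap.value}")
```

A tally like this would not fix anything by itself, but it could show which sites and which kinds of gaps deserve attention first.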

This work is not just about making our AI better. It’s about making our websites more accessible, our documentation more useful, and our services more responsive. Every gap we close improves the experience not just for the agent, but for the human trying to find their way. I didn’t expect this kind of feedback loop to emerge so quickly, but I’m glad it has. It reminds us to slow down, look closely, and be intentional, not just with how we build agents, but how we steward the information we share across this institution.

This post hits the hardest for me. The state of data and information on our campus has been the biggest aha moment for me as we continue to roll out AI capabilities across campus. Listening to the questions that come up in the Copilot trainings over the past several months, it’s clear that one of the main blockers to unlocking the power of AI is the state of our data and information. If anything, AI has opened the door to having these conversations and improving the experience for all. I’m in awe at the appetite and momentum to do better, to be better. The AI Studio is really looking forward to helping and that starts with “eating our own queso”. Enterprise Technology…you’re first!

The overwhelming challenge will be data, but not the “source of truth” data in financial, student, and people ERP systems. Those data are currently piped into the data platform. While we are still looking to unlock that with AI, the information that concerns me is the vast majority of public-facing content we have spread across the UT web ecosystem: thousands of sites, all managed by different people, with differing levels of oversight and differing levels of accuracy. If we start to unlock that problem, we could do several things at once: delight the community with accurate information, reduce the risk profile associated with web content, and reduce our technical debt.

I’m getting stuck on the quote “These agents may replace the sites”.

I don’t think websites are going anywhere. The web is evolving, yes. But it still exists for humans first and foremost, and so should AI. Chat interfaces and web browsing serve different purposes. For many tasks, I still prefer the clarity and control of a well-designed website. But for an increasing number of use cases, tools like UT Spark and ChatGPT are becoming indispensable. Their capabilities are growing fast, and so is their usefulness.

For some tasks, I haven’t fully decided which mode of interaction I prefer. Reading technical documentation is one example. I like being able to take my time reading through docs and being presented with everything that repo has available. Oftentimes I learn things I was not searching for. The same goes for certain websites within UT.

But when a service goes down and folks are waiting on a fix, I would rather chat with an LLM that has access to those docs, web search, and a Stack Overflow MCP to help me synthesize relevant information at speed than read through docs or logs on my own.

Give users the choice of how they want to interact with and experience content. I also see power in complementary services. Can a website and an agent exist in harmony? A specialized agent within a website is also a pattern gaining popularity as AI services start to become baked into the products, webapps, sites, and services we use every day. I actually can see users visiting our UT websites more than ever as agents link out to them within responses and list website urls as references. They go hand in hand.

“I don’t think websites are going anywhere.” — Cody Antunez, 2025

I love it! Well, I didn’t think that having to go to my high school guidance counselor to get a paper-based college application would go away either. The guidance counselor was the critical link, right? Turns out, the audience went where the information went next, and that movement matched the modality of the current social context. Keep in mind that time only moves in one direction when it comes to both technology and expectations. They go up and to the right. This is how disruptive innovation theory works.

I don’t argue that websites aren’t going anywhere anytime soon. But, just like the 10 years where you could still get that application in your guidance counselor’s office, we are charting the course for where the experience and the expectations are going. Leadership in a space is about saying we recognize the things we did were amazing, but what is done is done. The digital herd is moving as I type. They are moving to where the market is taking them, AI search, AI shopping, AI tutoring in schools. Those trends inform our decisions. The consumer market always precedes ours, but when the expectations happen, they happen without warning … unless you are watching.

We are not talking about killing websites. We are talking about reducing clutter and noise. We aren’t talking about reducing the value of the web; we are talking about finally taking it seriously. We aren’t talking about the death of democratized information; we are talking about the ultimate way to find the truth in our environment. All that to say, you are right, the web isn’t going anywhere today, but watch out for tomorrow.

I really appreciate this insight into the stumbling blocks of implementing a system like the one you’re rolling out. One of the most difficult tasks of hosting websites is keeping the information updated. So your discovery may in fact be a blessing in disguise. If ChatGPT can help pinpoint where critical data are missing, misleading, or outdated, sites can be updated or pruned, which in turn can then inform your chat interactions. The next challenge is identifying the site owners/editors who have the responsibility and access to update these pages! And of course, setting up processes to keep that data fresh. Thanks for this peek at the process.

This is interesting and it confirms what I think most people in Higher Ed IT know: that our internal silos, constant internal reorganizations, legacy systems, abandoned sites, staff turnover, and “follow the bouncing ball” to the new shiny thing in technology (MOOCs!) and “whack-a-mole” approach to solving problems (out of necessity mostly) — rather than any ability to strategize around our information — have left that information in a rather pathetic state. And AI is just revealing that.

In some sense, the curators and librarians of our information are the most important roles moving forward. AI built on a foundation of crap information will return crap results. The early WWW days were really the Wild, Wild West. (Funny how that lines up.) Every department was spinning up a web server and hopefully had someone who wanted to learn HTML. And, in so many cases, we are still curating information that way.

We don’t build a world-class art museum and ask everyone to drop off the paintings they like most. Because one person’s Van Gogh is another person’s velvet clown with a tear. And while little free libraries are awesome, that’s not the manner in which we can curate our institutional knowledge moving forward. And in many ways, the Chief Information Officer is the Chief Curator of all of the pieces that come together to form the collective knowledge of UT. And if anyone in higher education wants to really utilize the power of AI to advance collective knowledge, we probably need to think about it starting one website at a time and finally strategize about our informational assets. It’s our primary product in higher education. And it’s way overdue.

And as I think on it more, it is about Information Standards. We have spent a lot of time creating style guides and making sure everyone is putting the logo marks in the right place, using the correct font, and picking the right shade of burnt orange (Nittany Lion Blue, in my case). But what have we ever done to ensure that our web-based information is actually good? Or helpful? Or serving a purpose? AI is revealing the problem, but it also has the power to craft the solution.