The Sendai Framework for Disaster Risk Reduction is the successor agreement to the Hyogo Framework (2005-2015). It was adopted in 2015 and aims to substantially reduce “disaster risk and losses in lives, livelihoods and health and in the economic, physical, social, cultural and environmental assets of persons, businesses, communities and countries.” However, a closer look at the way the overall score for one of its targets is calculated suggests that there might be an underlying coding error.

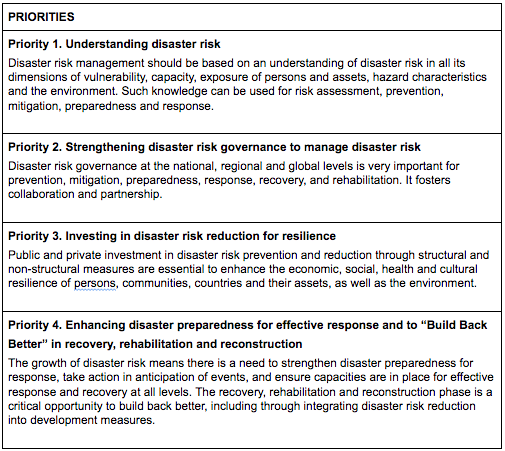

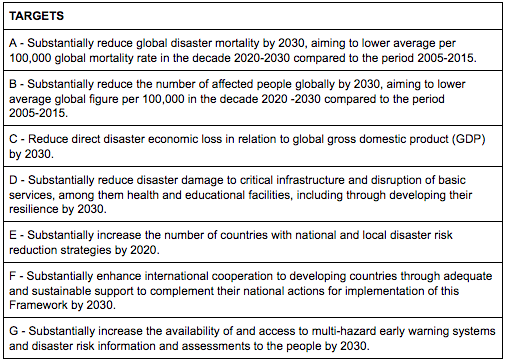

By setting clear targets and deliverables for the year 2030, the Sendai Framework focuses primarily on engaging communities and governments to build resilience. Overall, it has seven targets and four priorities:

In 2017, the United Nations Office for Disaster Risk Reduction (UNDRR, formerly known as UNISDR) published technical guidelines to help countries and stakeholders compute the relevant targets and report their yearly improvement on the Sendai Monitor. Similarly, in 2019 the UNDRR launched an e-learning program to coach stakeholders on how to use the platform. The scores must be reported on a yearly basis, using 2015 as the baseline year for comparison.

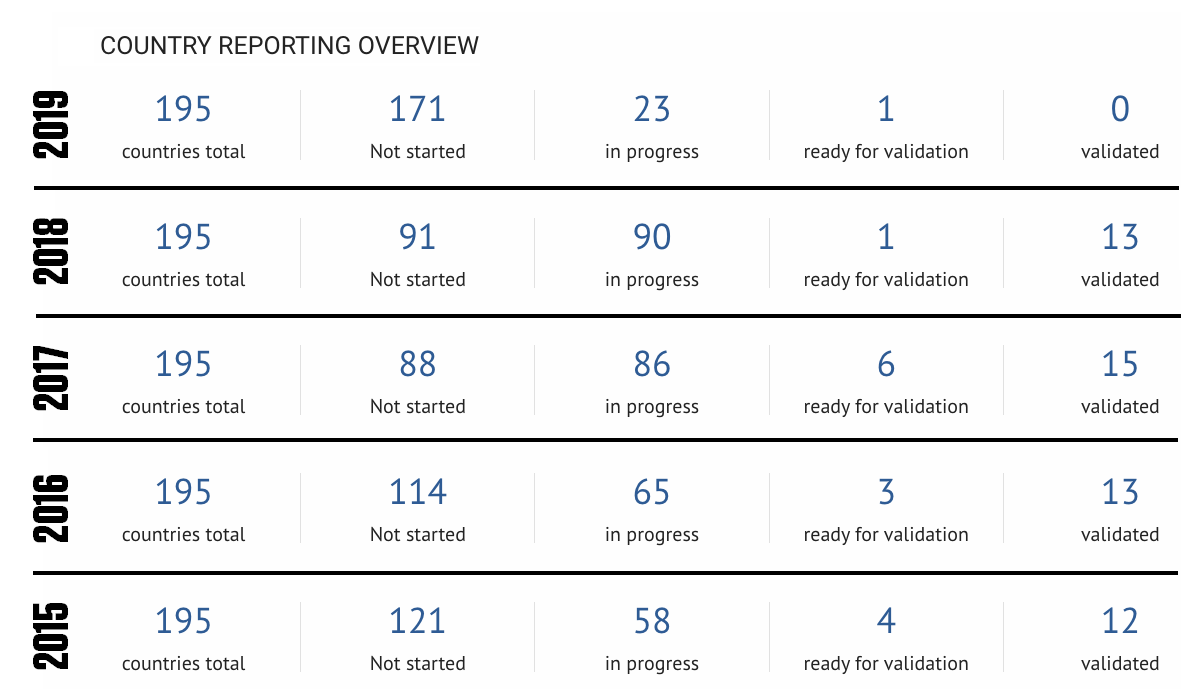

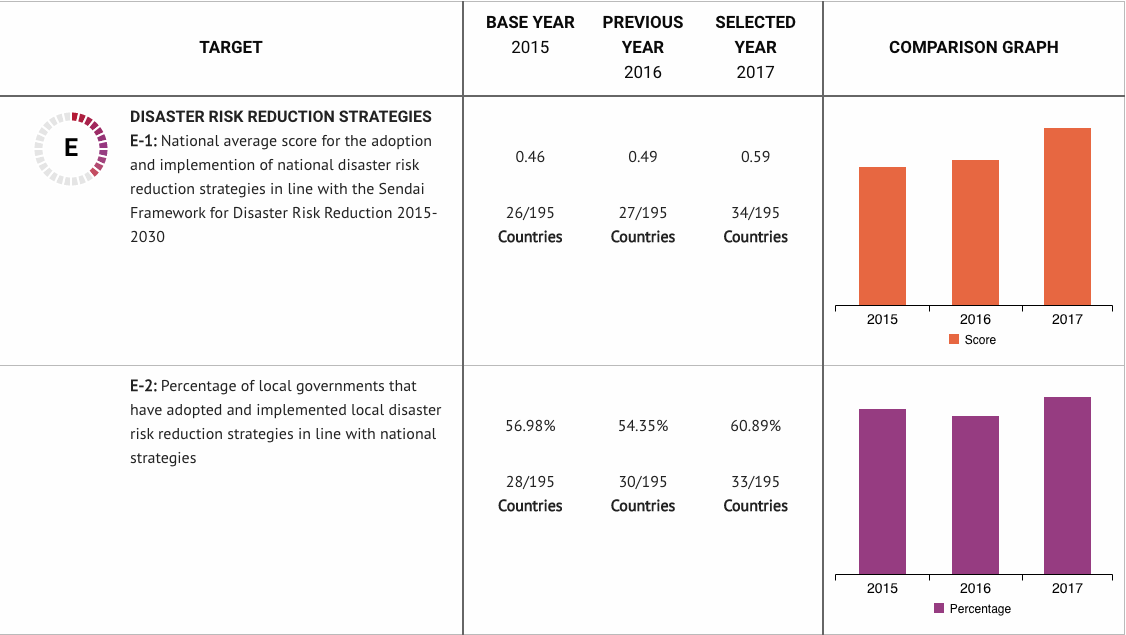

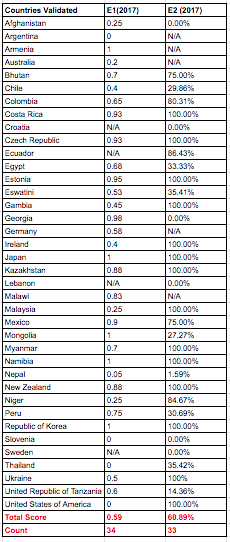

However, as shown in figure 3, less than 7.5% of all countries have had their scores validated on the platform; the majority of countries have not even started reporting yet.

The low number of countries engaging with the platform makes it hard to use the platform’s scores to build a global comparative analysis that reflects reality. However, for the purpose of Disaster Risk Preparedness in Oceania specifically, we will focus on the countries that have reported on Target E to try to understand how their scores were computed.

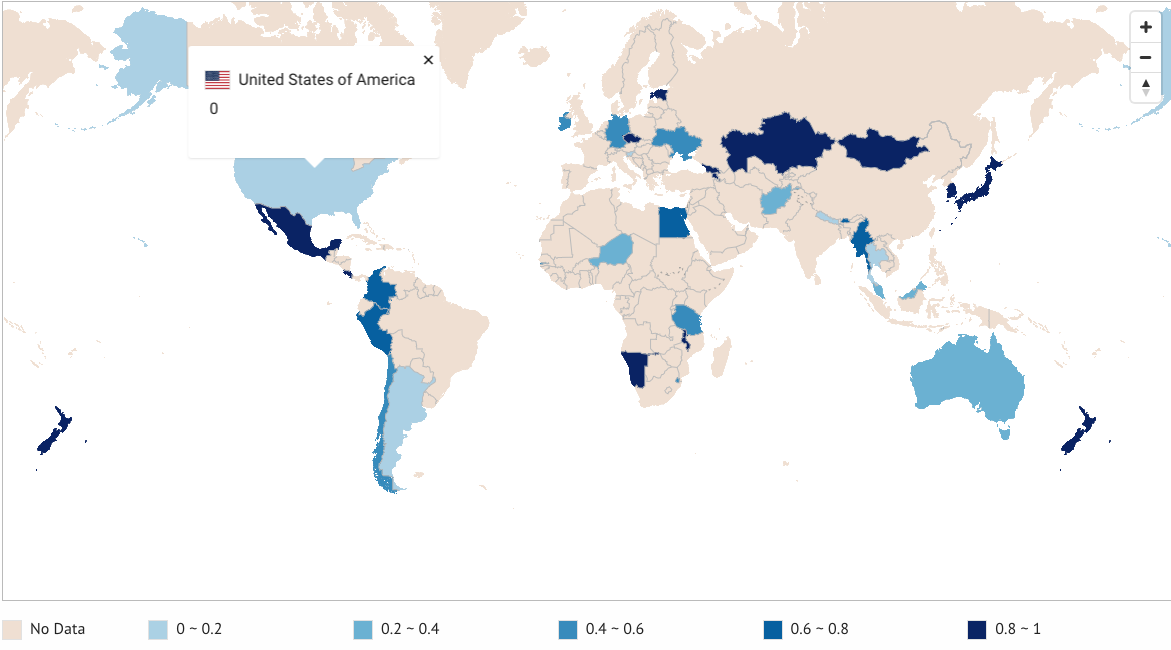

Target E

Target E is a measure that aims to increase the number of countries and local actors engaging in proper Disaster Risk Reduction (DRR). Its goal is to: “Substantially increase the number of countries with national and local disaster risk reduction strategies by 2020.”

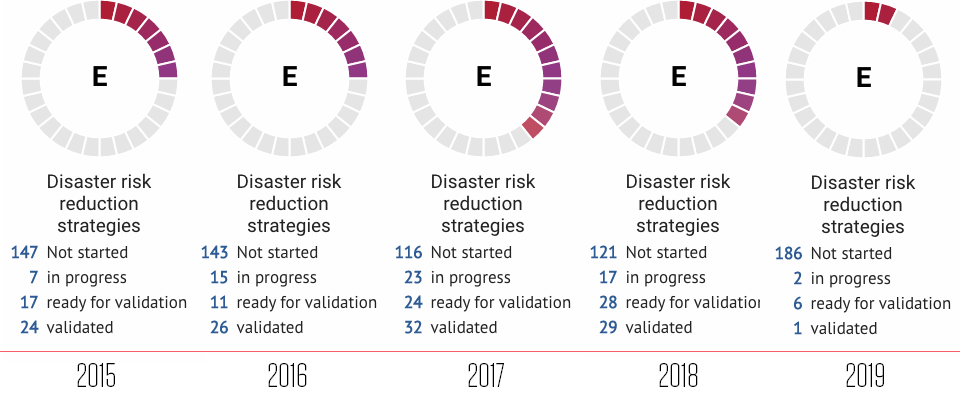

The Sendai Framework set a cut-off point of 2020 for Target E only, to urge countries to develop a DRR strategy first and then have enough time to attain the remaining targets by 2030. However, as of 2019, a maximum of 16% of the countries had had their scores validated; the large majority had not even started reporting.

Out of the countries reporting on Target E (34 countries in total), less than 60% (figure 5) had engaged in proper DRR planning on a national level in 2017, and 61% on a local level. That means that, out of 195 countries, we can be sure that only around 21 have a sound DRR strategy in place and 13 do not. There is no reliable data on the remaining 161 countries.

By working my way around the platform and comparing the scores to the maps projected, I was able to find all the scores reported for Target E. This task would have been a lot easier if the platform provided a list of scores for Target E per country. Instead, the platform provides a color-coded map and the ability to search for the score of a specific country. Below is the list of scores for sub-targets E1 and E2 for the year 2017:

The totals I arrived at from this list matched the platform’s final scores (final two rows), which confirms the list:

- The total score for the 34 countries that have validated their scores for E1 is 0.59

- The total score for the 33 countries that have validated their scores for E2 is 60.89%

But a closer look suggests that there might be a problem with the way the final score was computed.

The platform assumes a score of “0” for some countries where data was actually unavailable (according to the same platform) but does not do the same for others.

For example:

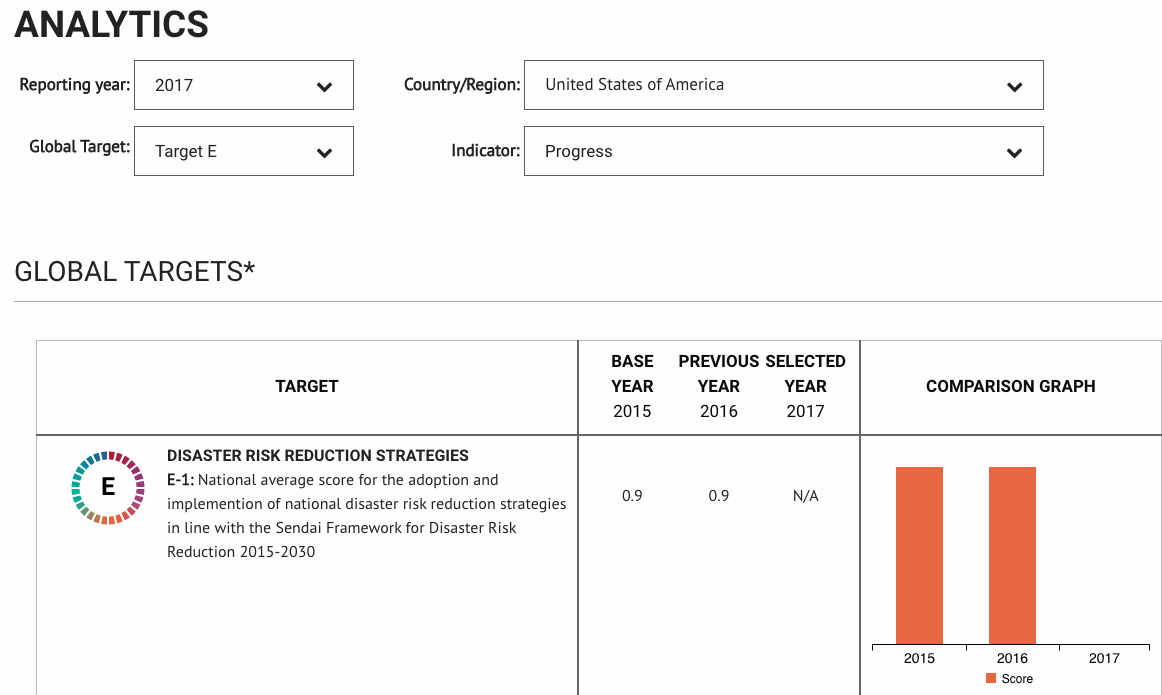

For E1: Argentina, Slovenia, Thailand, and the United States were given a score of 0.

However, if you search for these countries individually, the same platform indicates that E1 data for them is unavailable:

Conversely, the remaining countries whose scores are similarly unavailable (Croatia, Ecuador, Lebanon, and Sweden) were not counted in the final score.

Similarly, in calculating E2, Afghanistan, Croatia, Lebanon, Slovenia, and Sweden were given a score of 0% when no score had actually been reported, while Argentina, Armenia, Australia, Germany, and Malawi were omitted from the final count.
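To make the difference between the two treatments concrete, here is a minimal Python sketch. The country names and scores are hypothetical placeholders, not values taken from the Sendai Monitor, and the two helper functions are my own illustration rather than the platform's actual back-end logic.

```python
from statistics import mean

# Hypothetical scores for illustration only; None marks "data unavailable".
scores = {
    "Country A": 0.9,
    "Country B": 0.6,
    "Country C": None,  # no data reported
    "Country D": 0.3,
}

def mean_zero_filled(values):
    """Average that treats unavailable data as a score of 0."""
    return mean(0.0 if v is None else v for v in values)

def mean_available_only(values):
    """Average over reported scores only; unavailable data is skipped."""
    reported = [v for v in values if v is not None]
    return mean(reported)

zero_filled = mean_zero_filled(scores.values())
available_only = mean_available_only(scores.values())

print(f"zero-filled:    {zero_filled:.3f} over {len(scores)} countries")
print(f"available only: {available_only:.3f} over "
      f"{sum(v is not None for v in scores.values())} countries")
```

With these made-up numbers, the zero-filled average is lower and the country count is higher than when the missing entry is skipped. Mixing both treatments in a single total, as the platform appears to do, gives a figure that matches neither.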

Recording a score of zero when data is unavailable harms the platform in two ways:

- It overstates the number of countries validated for Target E, when in fact there are only 30 validated for E1 (not 34) and 28 for E2 (not 33).

- It inadvertently skews the total score downward. If we were to assume an “N/A” score instead of “0” for countries with unavailable data, the total scores for E1 and E2 would become:

| | E1 | E2 |
| --- | --- | --- |
| Total Score (old) | 0.59 | 60.89% |
| Count (old) | 34 | 33 |
| Total Score (new) | 0.674 | 72% |
| Count (new) | 30 | 28 |
| Percent Increase | 13% | 18% |

Moreover, counting a zero for the United States, for example, drags the final score down considerably, since the United States had a high score of 0.9 for E1 in all previous years.
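The adjusted totals in the table can be approximated from the published aggregates alone. Since a zero adds nothing to the sum, dropping the zero-filled countries leaves the sum unchanged and only shrinks the count. The sketch below assumes the platform's total is a simple unweighted mean; the function name is my own. Because it starts from the rounded published figures (0.59 and 60.89%), it gives roughly 0.67 for E1 and 71.8% for E2, which agrees with the table to within rounding.

```python
def mean_without_zeros(old_mean, old_count, new_count):
    """Rescale a mean after dropping entries that contributed 0 to the sum.

    Assumes an unweighted mean, so sum = old_mean * old_count stays the same
    and only the divisor changes.
    """
    if not 0 < new_count <= old_count:
        raise ValueError("new_count must be between 1 and old_count")
    return old_mean * old_count / new_count

# Published 2017 aggregates and the corrected counts from the table.
e1_new = mean_without_zeros(0.59, old_count=34, new_count=30)
e2_new = mean_without_zeros(60.89, old_count=33, new_count=28)

print(f"E1 without zero-filled countries: {e1_new:.3f}")
print(f"E2 without zero-filled countries: {e2_new:.2f}%")
```

The small gap between this E1 estimate and the 0.674 in the table is most likely because the published 0.59 is itself a rounded value.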

All in all, errors happen all the time. But on a bigger scale, if this particular error is not rectified, the overall scores will be far off. Unless there is an alternative explanation for these computations, the Sendai Monitor must correct the back-end for the year 2017 and make sure that the same is not happening for other years.