Design of human-centered robots

Since 2011 we’ve been designing human-centered robots. We started with high-performance series elastic actuators, went on to help NASA design the Valkyrie humanoid robot, and most recently built DRACO, our newest and soon-to-be-announced liquid-cooled viscoelastic biped.

Whole-Body Control architectures


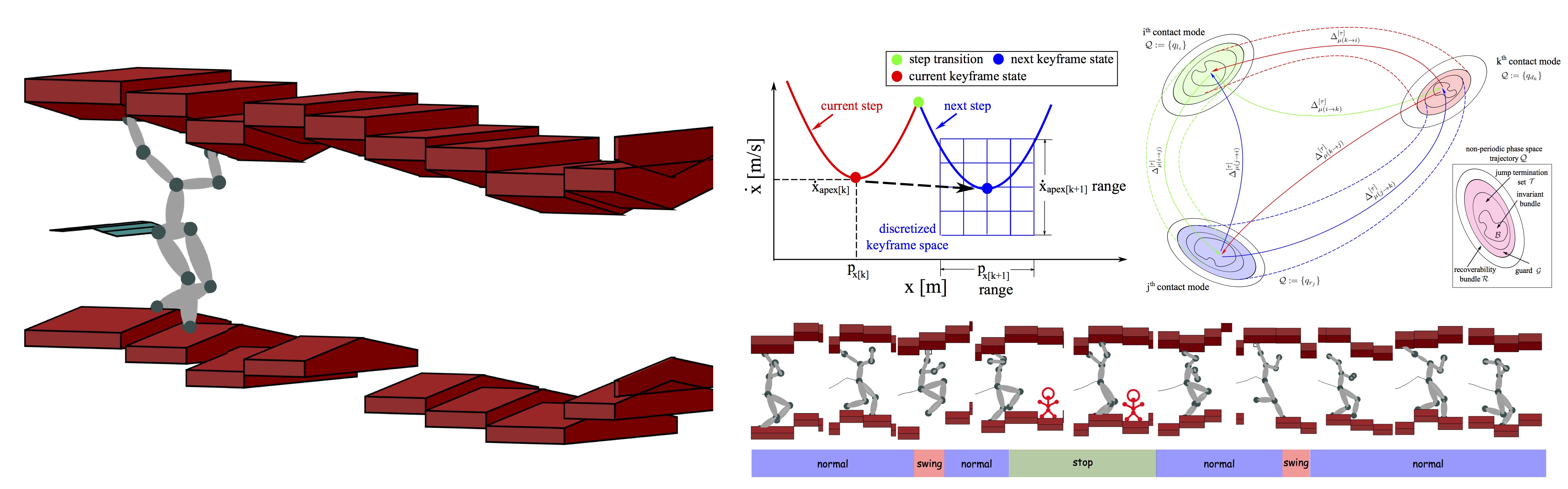

Dynamic locomotion

Integration of high- and low-level planning and control

Our objective in this research is to develop 3D foot placement planners for rough terrain that remain robust to perturbations. We employ techniques such as phase space motion planning, dynamic programming, and linear temporal logic to produce sophisticated biped and multi-contact legged behaviors in cluttered environments.
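To give a feel for how foot placement can respond to a perturbation, here is a minimal sketch. It is not our phase space planner: it uses the simpler, well-known linear inverted pendulum (LIP) model and its capture point, restricted to one horizontal axis. The function names, the constant center-of-mass height, and the numbers are assumptions made for illustration only.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def capture_point(x, xdot, height):
    """Foot position at which a LIP with CoM state (x, xdot) comes to rest.

    Assumes a point mass at constant height and an instantaneous step.
    """
    omega = math.sqrt(G / height)  # natural frequency of the pendulum
    return x + xdot / omega


def orbital_energy(x, xdot, height, foot):
    """Quantity conserved along a LIP phase-space trajectory for a fixed foot.

    A value of zero means the CoM converges to rest above the foot.
    """
    omega_sq = G / height
    return 0.5 * xdot ** 2 - 0.5 * omega_sq * (x - foot) ** 2


# Hypothetical state: CoM at the origin, moving forward at 0.5 m/s, 1 m high.
nominal_step = capture_point(0.0, 0.5, 1.0)

# A push that raises the CoM velocity moves the required foothold forward.
pushed_step = capture_point(0.0, 0.8, 1.0)
```

Stepping exactly on the capture point puts the state on the zero-energy curve of the phase portrait, which is why `orbital_energy` evaluates to zero there. A planner for rough terrain would have to handle varying height, 3D motion, and contact constraints, none of which this sketch covers.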

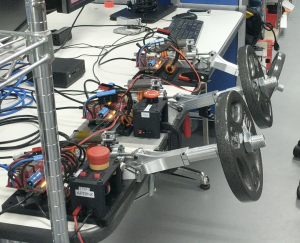

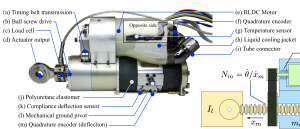

Design of High Performance Actuators

We investigate lightweight, high-performance actuators. We explore mechanical, electrical, and control design techniques to maximize power and efficiency while minimizing size and weight. Applications include legged robots and rehabilitation devices.

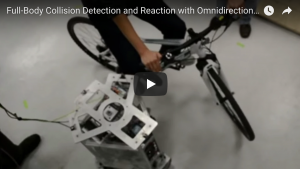

Whole-Body Contact Awareness and Safety

We develop estimation and control methods for quickly reacting to collisions between omnidirectional mobile platforms and their environment. To enable full-body detection of external forces, we use torque sensors located in the robot’s drivetrain. Our goal for this research is to achieve 99.99% safety of humans in close proximity to collaborative humanoid robots. The general approach is to create and implement theories that enable the robot to (a) recognize the intent of the human, (b) generate intelligent action plans for fast supportive responses, and (c) react compliantly to unintended physical collisions. We validate our theories on two robotic platforms.
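The role of the drivetrain torque sensors can be illustrated with a minimal sketch. It assumes that a model of the platform supplies the torque each joint or wheel should see in free motion, and that a collision shows up as a residual between measured and expected torque. The function name, the fixed threshold, and the sample values are hypothetical and do not describe our estimator.

```python
def detect_collision(measured, expected, threshold):
    """Return per-joint torque residuals and the indices that exceed threshold.

    measured  -- torques read from the drivetrain sensors (N*m)
    expected  -- torques predicted by a (hypothetical) model of free motion (N*m)
    threshold -- residual magnitude treated as an external contact (N*m)
    """
    residuals = [m - e for m, e in zip(measured, expected)]
    hits = [i for i, r in enumerate(residuals) if abs(r) > threshold]
    return residuals, hits


# Hypothetical readings for a three-wheel omnidirectional base.
residuals, hits = detect_collision(
    measured=[1.0, 2.0, 5.5],
    expected=[1.1, 2.0, 3.0],
    threshold=0.5,
)
```

Here only the third wheel is flagged. A practical detector would also filter sensor noise and account for model error before a compliant reaction is triggered.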

Cloud Based Robotics Laboratory

In this line of research we explore access to the controller stack of humanoid robots through a web browser and a web framework. The idea is to connect multiple nodes to a hardware asset in order to program, control, and perform experiments with robots and cloud-based laboratory equipment.

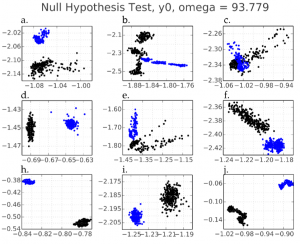

Robust Modeling of Human-Centered Systems

We investigate the use of frequency domain identification for obtaining robust models of series elastic actuators. This early work focuses on identifying a lower bound on the H∞ uncertainty, based on the non-linear behavior of the plant when it is identified under different conditions. An antagonistic testing apparatus allows the identification of the full two-input, two-output system. The aim of this work is to find a model that explains all the observed test results despite physical non-linearity. The approach guarantees that a robust model includes all previously measured behaviors, and thus predicts the stability of never-before-tested controllers.

Output phasors plotted against linear model predictions for randomly generated condition groups.
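The idea of a robust model that covers every measured behavior can be sketched for a single-input, single-output case. The sketch assumes a multiplicative uncertainty form, which is a choice made here for illustration. At each test frequency, the largest relative deviation of any measured response from the nominal linear model gives a lower bound on the uncertainty weight that frequency needs. The function name and the sample phasors are hypothetical.

```python
def uncertainty_lower_bound(nominal, measured_runs):
    """Lower bound on multiplicative uncertainty at each test frequency.

    nominal       -- complex response of the nominal linear model, one per frequency
    measured_runs -- list of runs, each a list of complex measured responses
                     taken at the same frequencies under a different condition
    """
    bounds = []
    for i, g_nom in enumerate(nominal):
        # Worst-case distance between any measurement and the nominal model.
        worst = max(abs(run[i] - g_nom) for run in measured_runs)
        bounds.append(worst / abs(g_nom))
    return bounds


# Hypothetical phasors at two frequencies, identified under two conditions.
nominal = [1.0 + 0.0j, 0.5 - 0.5j]
runs = [
    [1.1 + 0.0j, 0.5 - 0.4j],
    [0.9 + 0.0j, 0.6 - 0.5j],
]
bounds = uncertainty_lower_bound(nominal, runs)
```

Any uncertainty weight whose magnitude is at least `bounds[i]` at frequency `i` contains every measurement in `runs`. The two-input, two-output case would replace the absolute values with matrix norms.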
